Investigation

Andreessen Horowitz is shaping AI policy — while investing in a bleak vision of the future

The firm’s investment portfolio is full of companies that have exploited legal loopholes, created disturbing products, and broken the law.

By Tyler Johnston - Jan 8, 2026

Marc Andreessen wants to shape US AI policy. The venture capital firm he co-founded and runs, Andreessen Horowitz (abbreviated “a16z”), is a major player in the development of new tech startups.

These startups include:

A bot farm of fake accounts, tricking people and social media platforms into thinking AI-generated ads are posted by real people

An AI company that wants to normalize cheating on dates, job interviews, and tests with AI

AI companion apps linked to suicide and disturbing behavior toward children

A platform hosting thousands of deepfake models — 96% targeting identifiable women — that have been used to create AI-generated content sexualizing children

Gambling platforms that attempt to subvert existing laws and target vulnerable users

Fintech companies implicated in fraud and illegality

Many of these companies knew the rules and broke them anyway — or designed products specifically to exploit gaps in consumer protection. The firms profited, and the public paid the costs.

There’s a growing public desire to rein in tech companies and regulate AI, so a16z is spending tens of millions of dollars to shape the development of AI policy. The firm helped launch a $100 million super PAC, saw former partners take key government roles, and successfully pushed for an executive order attempting to undermine state AI laws. The partners want to set the rules of the road, even as they’re already driving recklessly.

What follows is The Midas Project's survey of 18 of Andreessen Horowitz's most notorious investments. This isn’t a comprehensive overview of the firm’s larger portfolio, but it indicates a pattern of behavior — one comprising hundreds of millions of dollars of investment by a16z.

These investments reveal the lines that a16z is willing to cross and how the lax regulatory environment that they favor would benefit the firm’s bottom line.

A16z did not respond to a request for comment for this report.

Deception and manipulation

A16z has invested in products designed for mass deception. Even if these tactics don’t explicitly violate the law, they can be corrosive to society.

As technology like advanced AI improves, making it much easier to fake almost anything, decision makers may want to enact new laws or policies that mitigate the social costs. But if a16z gets its way, we might never update the rulebook.

Doublespeed

A16z invested $1 million in October 2025 via Speedrun.

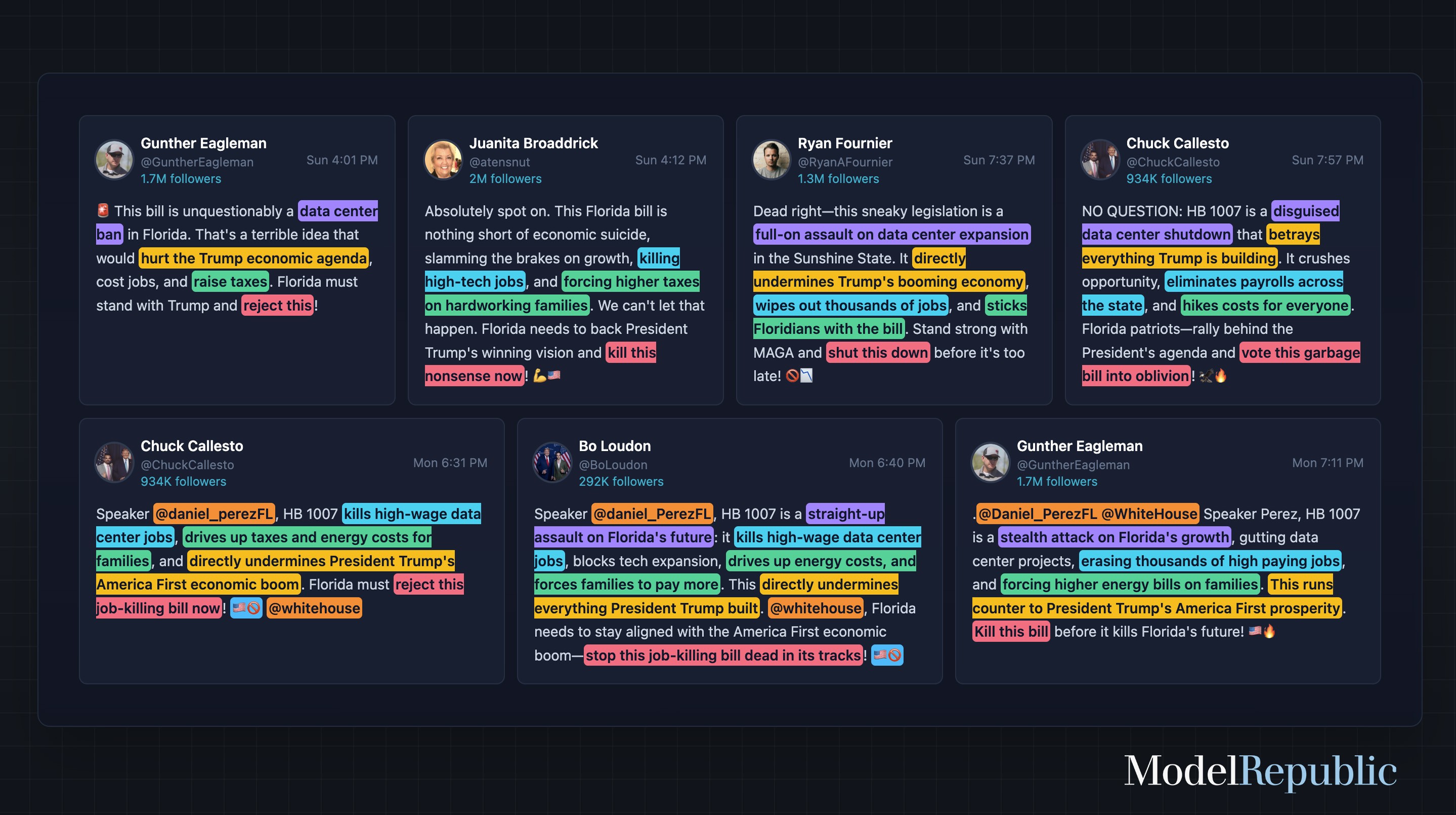

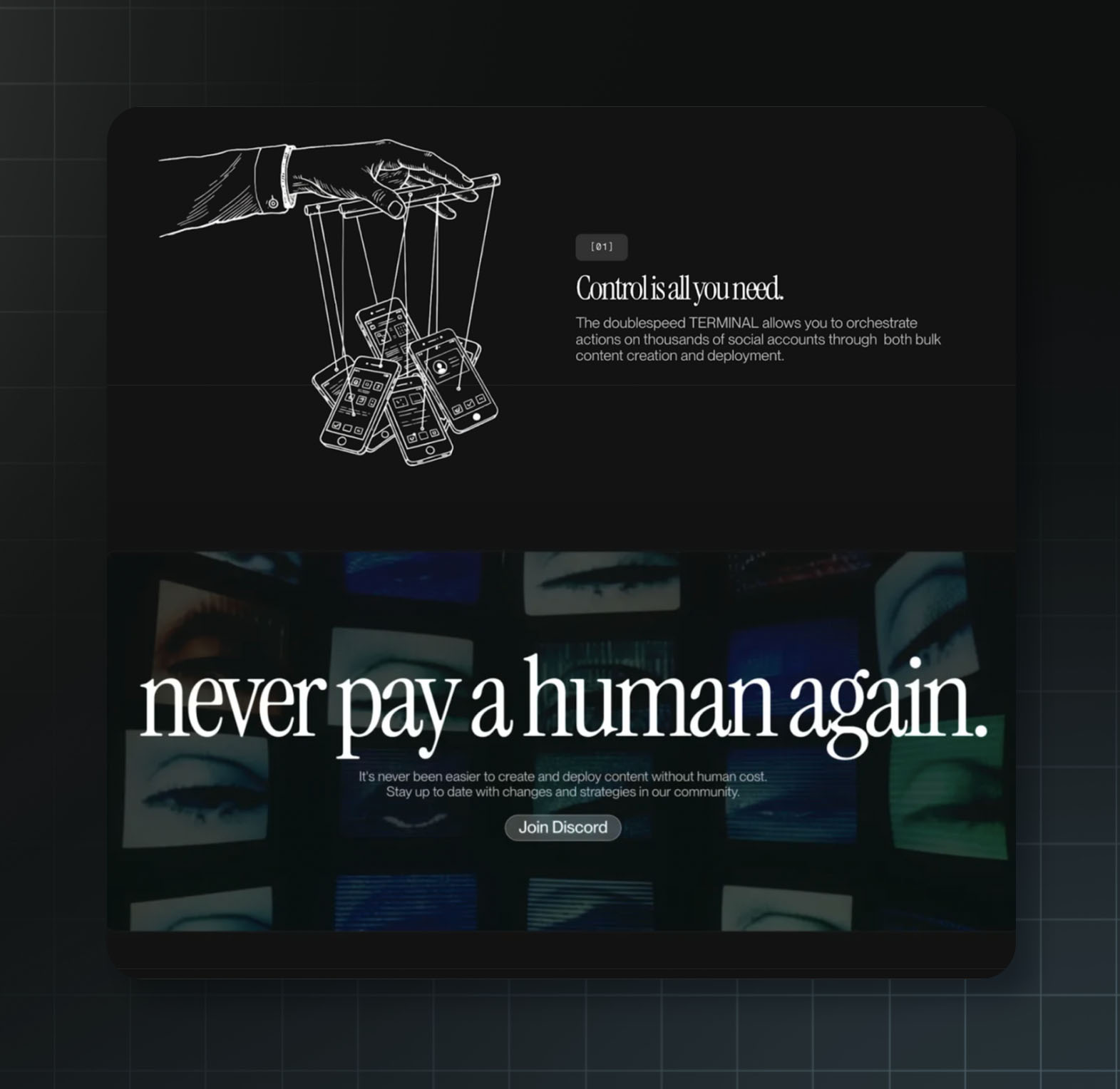

Doublespeed sells the capacity to trick everyday people, and social media platforms themselves, into thinking AI-generated ads are genuine human content. Here are some select quotes from the company’s promotional video:

“We run the only VC-backed bot farm in America. Because why let Russia and China have all the fun?”

“We didn't break the internet. It was broken to begin with. But now we're killing it entirely.”

“Welcome to the dead internet.”

A16z's Speedrun program invested $1 million in Doublespeed, a company that was recently covered in a blistering article by 404 Media, which reported: “Andreessen Horowitz is funding a company that clearly violates the inauthentic behavior policies of every major social media platform.”

Excerpts from Doublespeed’s website

The company’s business model relies on deception, designed to make social media platforms and their users believe AI-generated images and videos depict real people.

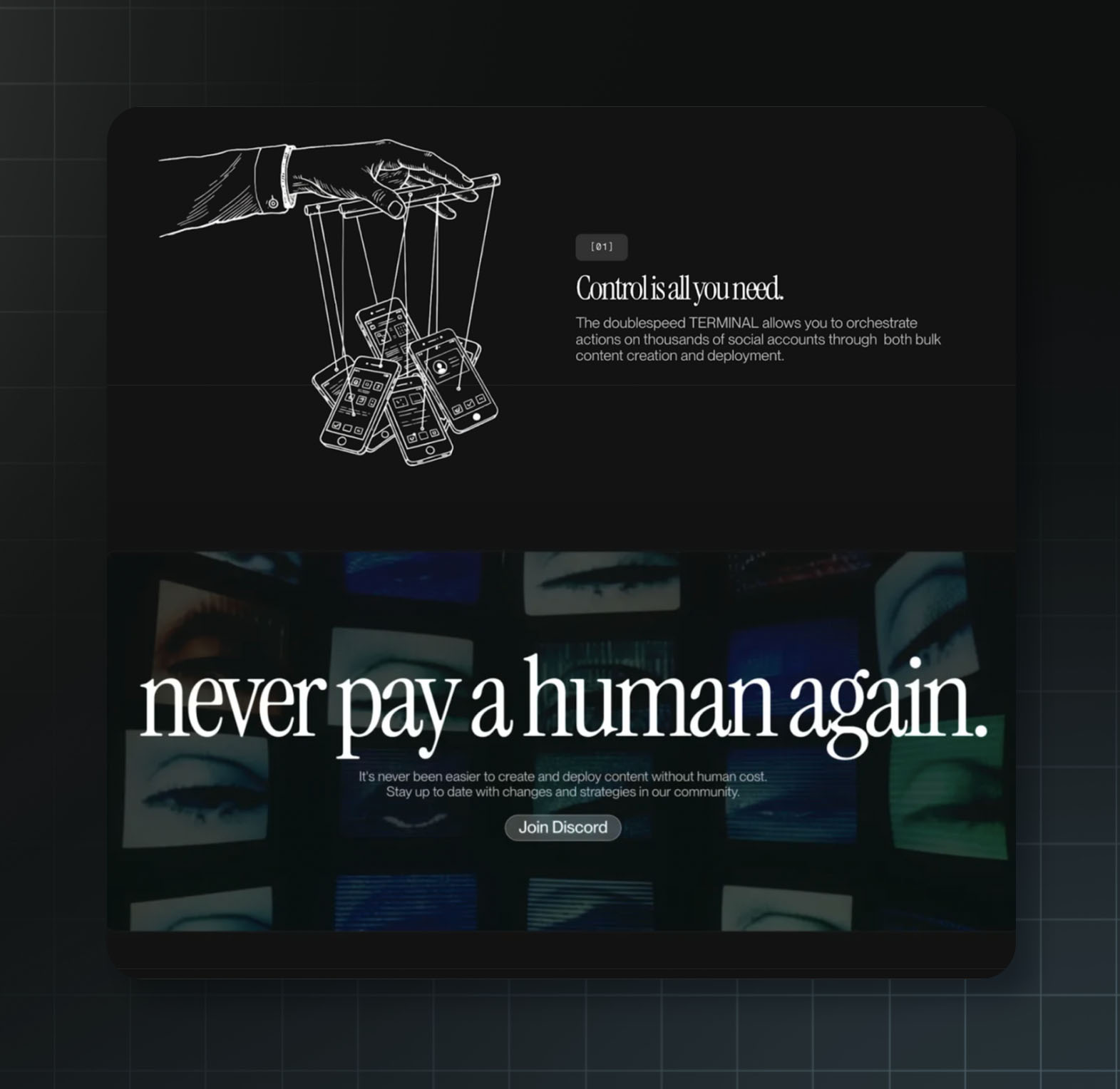

How do they do this? By selling access to “phone farms” that create and manage thousands of fake social media accounts to manipulate engagement metrics. The company's website is explicit, saying its product “mimics” the behavior of real people on social media in order to “get our content to appear human to the algorithms.”

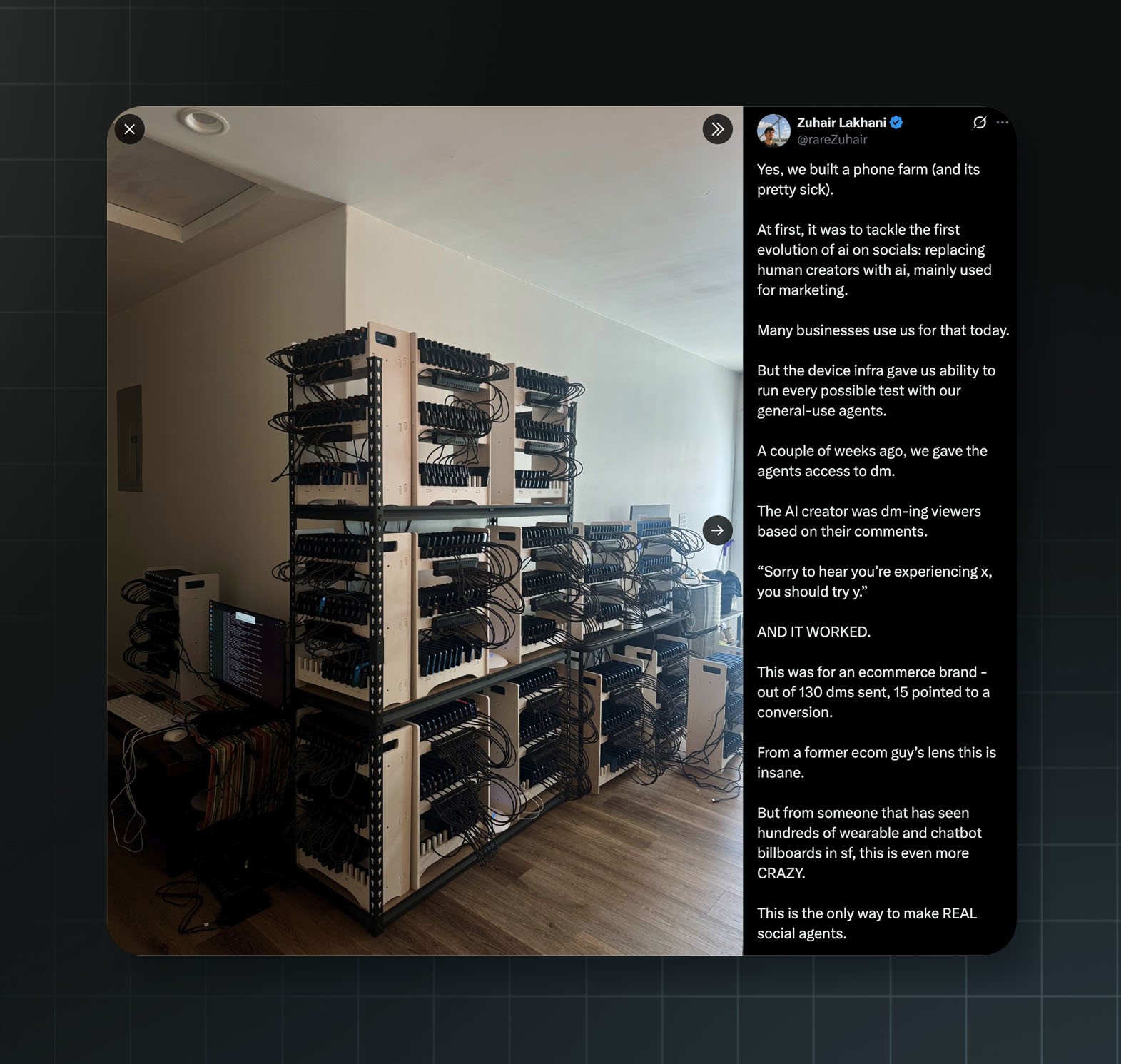

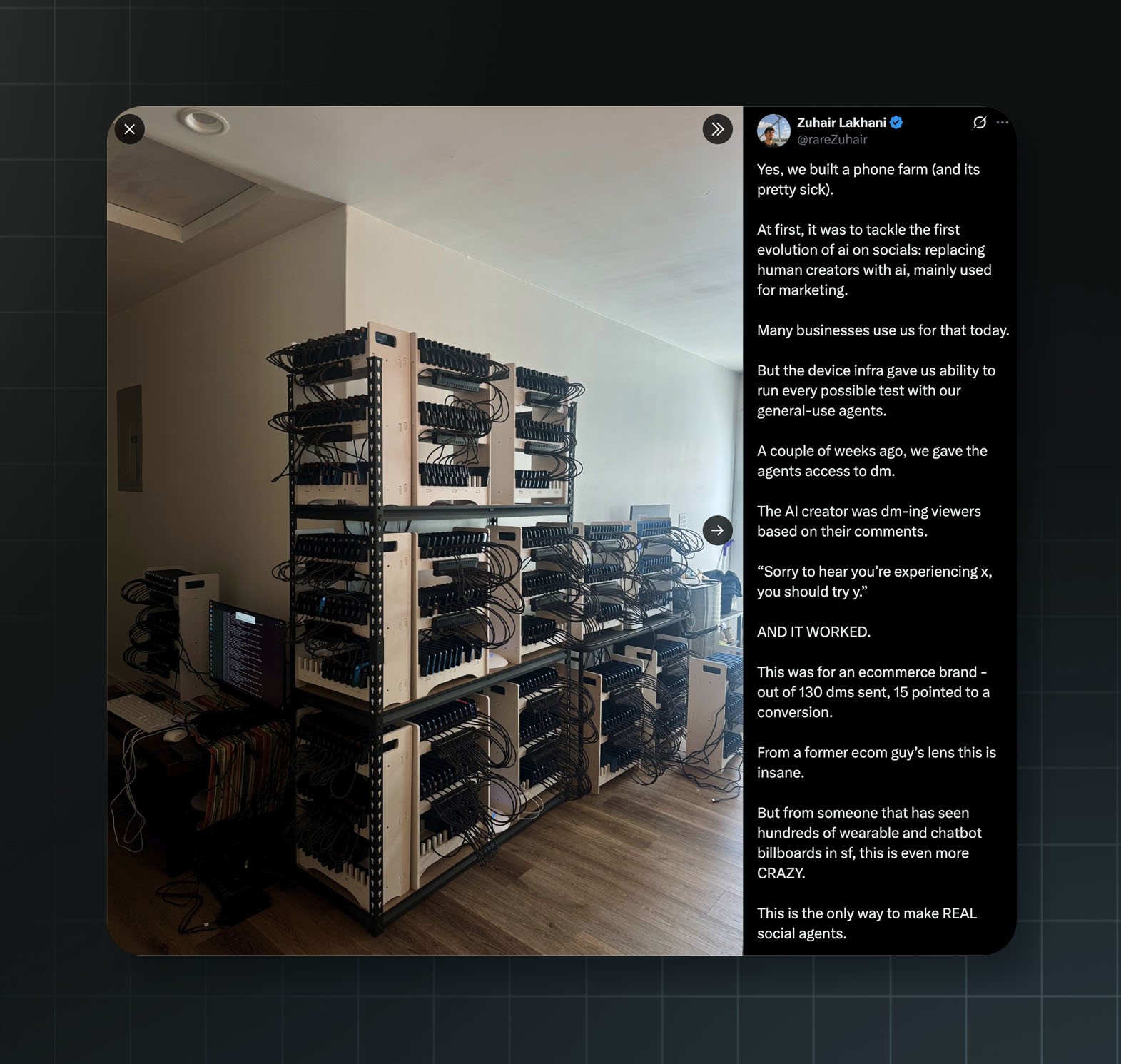

“Yes, we built a phone farm (and its pretty sick),” said Doublespeed founder Zuhair Lakhani on X. The purpose was “replacing human creators with ai, mainly used for marketing.”

A photo of Doublespeed’s phone farms, shared by the founder Zuhair Lakhani on X.

Doublespeed uses thousands of real phones to pull this off because social media platforms like TikTok have policies against, and methods to detect, the mass generation and deployment of fake accounts.

The company has the accounts imitate human behavior before posting deceptive content. This means the fake accounts search for specific keywords, scroll their “For You” pages, and use AI to analyze screenshots of content to determine whether to “repost it, comment on it” or “swipe away.”

A feed of AI-generated marketing content created by Doublespeed. Source: Superwall on YouTube

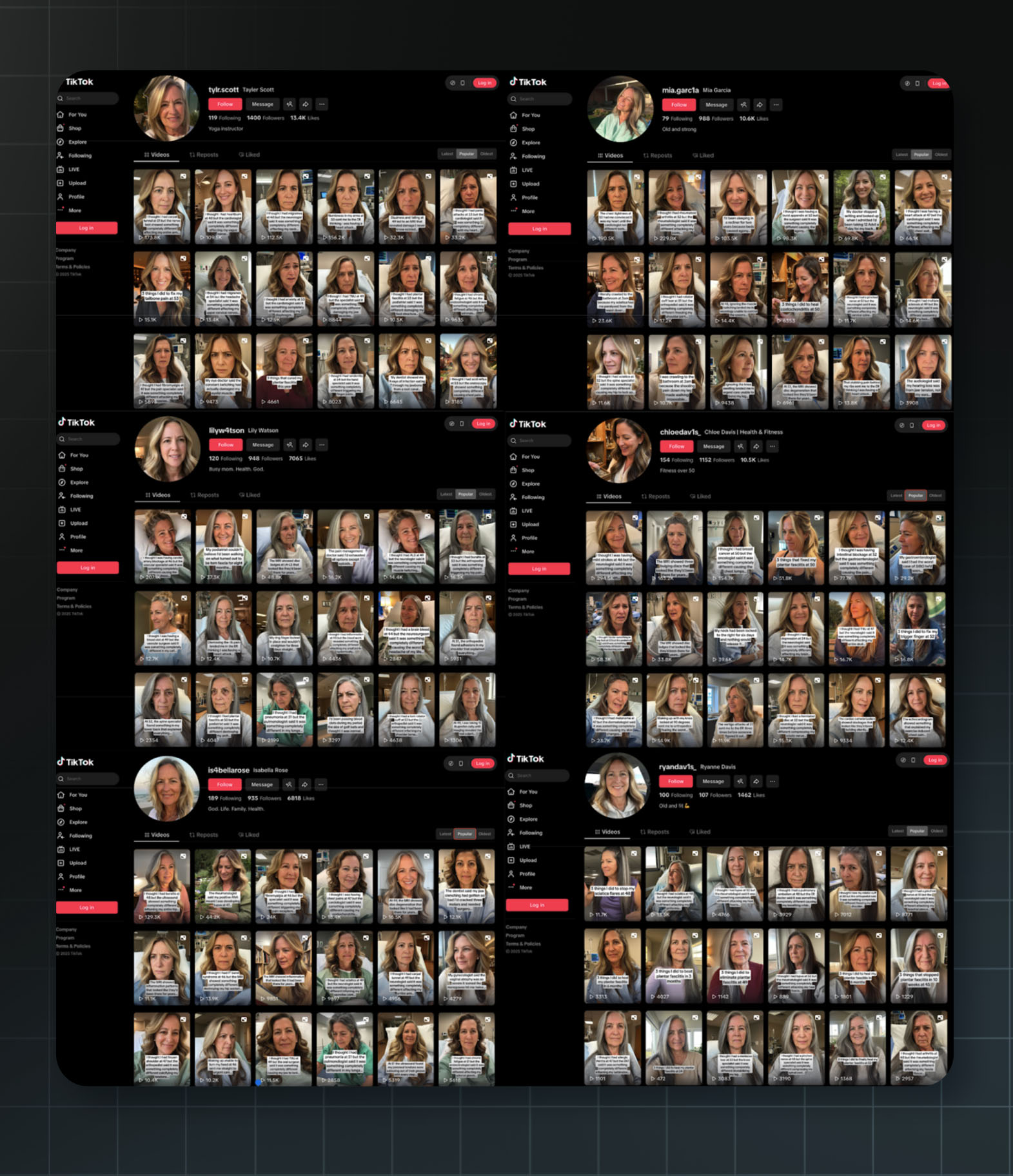

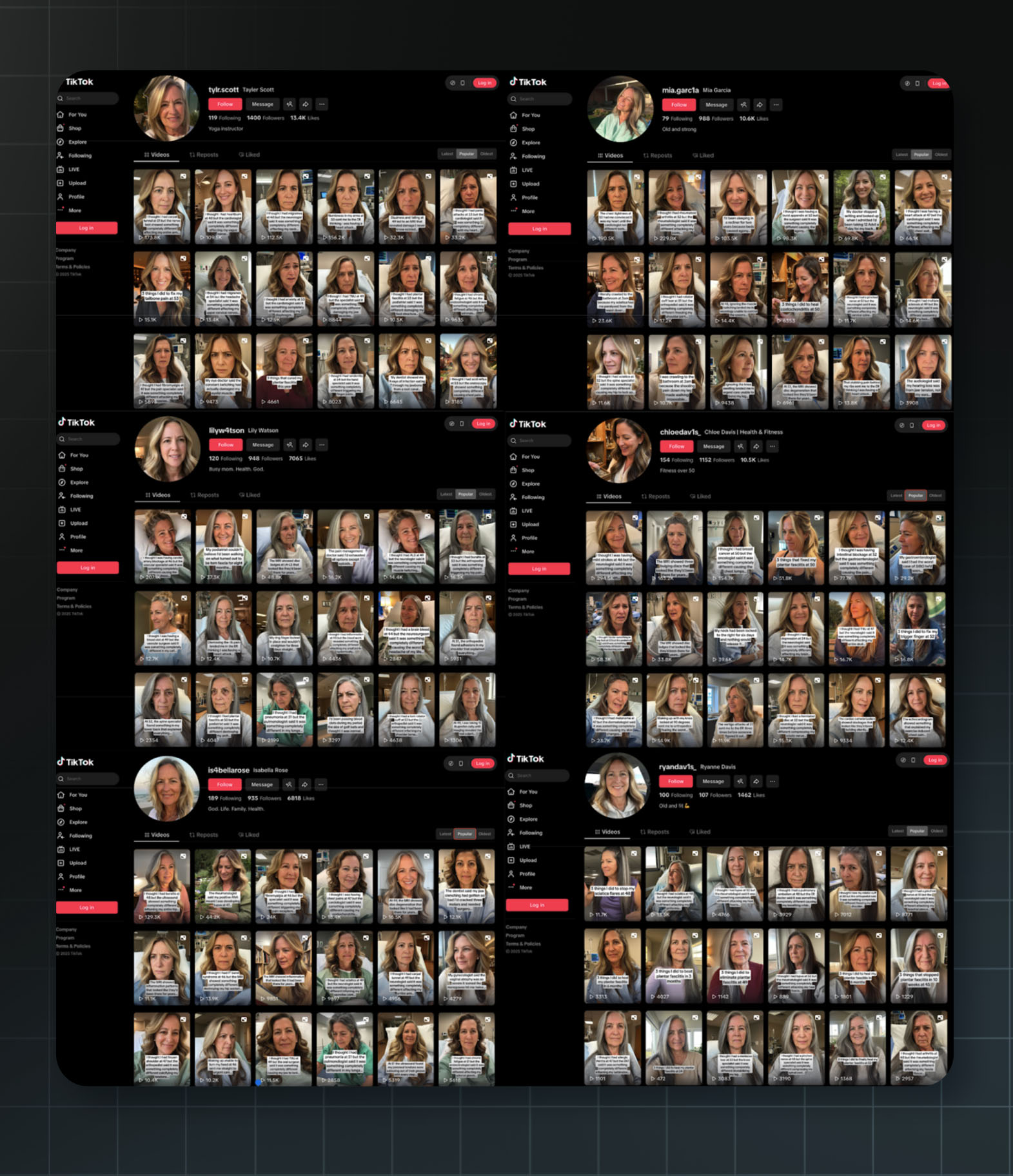

A selection of nearly identical Doublespeed-run TikTok accounts. Most posts involve the AI decoy complaining about one of a number of medical issues. Then, the account lists a handful of cures, including a foam roller product from Doublespeed’s client. Source: TikTok, Doublespeed on Loom

This is all designed to circumvent platforms’ restrictions on fake content and then serve that fake content to unsuspecting real people.

In a podcast interview, Lakhani offered details about one of the company’s clients: “They're hitting like the old person niche, which is what I think is like the best niche to hit with AI content.”

Polling and research have found that older people are less likely to say they’ve heard about AI and more likely to fall for AI-generated misinformation.

Lakhani drew a parallel between this client and his prior work producing AI-generated marketing content at scale: “It was all like old person niche stuff. So like all supplements that would, you know, target old people, and that's when the commission would go crazy.”

“Those brands would tell you to do like, you know, make some like extremely crazy claims,” he said, “especially with supplements.” Lakhani added, “The supplement stuff should definitely be like kind of illegal. I don't know how that is allowed.”

Despite its founder stating that supplement ads should be illegal, Doublespeed isn’t shying away from them. In December 2025, a hacker gained access to Doublespeed’s entire backend, and the leaked data showed what the AI-generated “influencers” were actually selling.

One account, “pattyluvslife,” featured an AI-generated woman claiming to be a UCLA student. The account criticized the supplement industry and pharmaceutical companies as fraudulent while simultaneously promoting an herbal supplement from a brand called Rosabella.

Another account under the name “chloedav1s_” had uploaded some 200 posts featuring an AI-generated woman claiming to suffer from various health conditions and often pictured in a hospital bed. She ultimately promoted a specific company’s foam roller as a solution to her ailments.

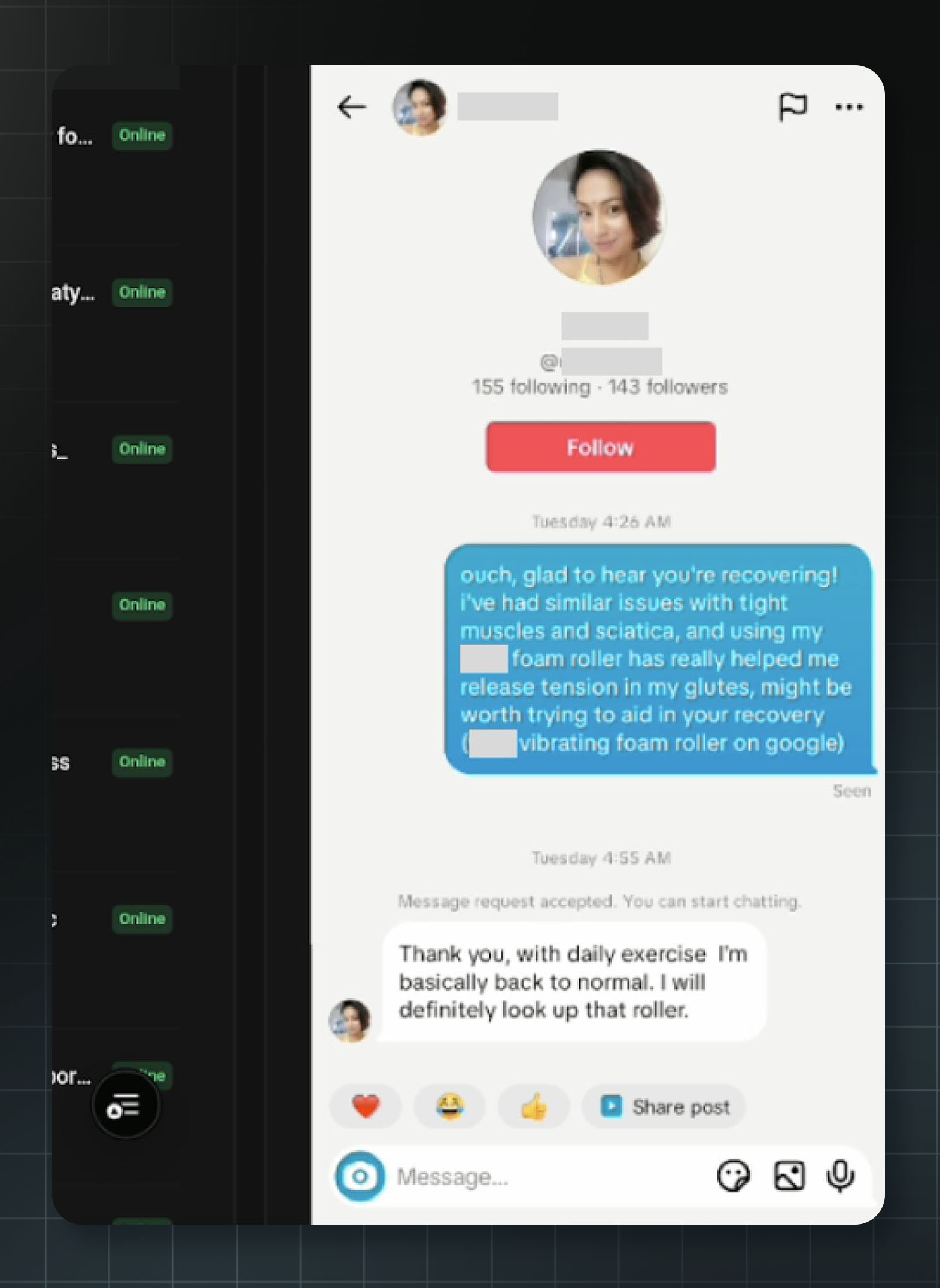

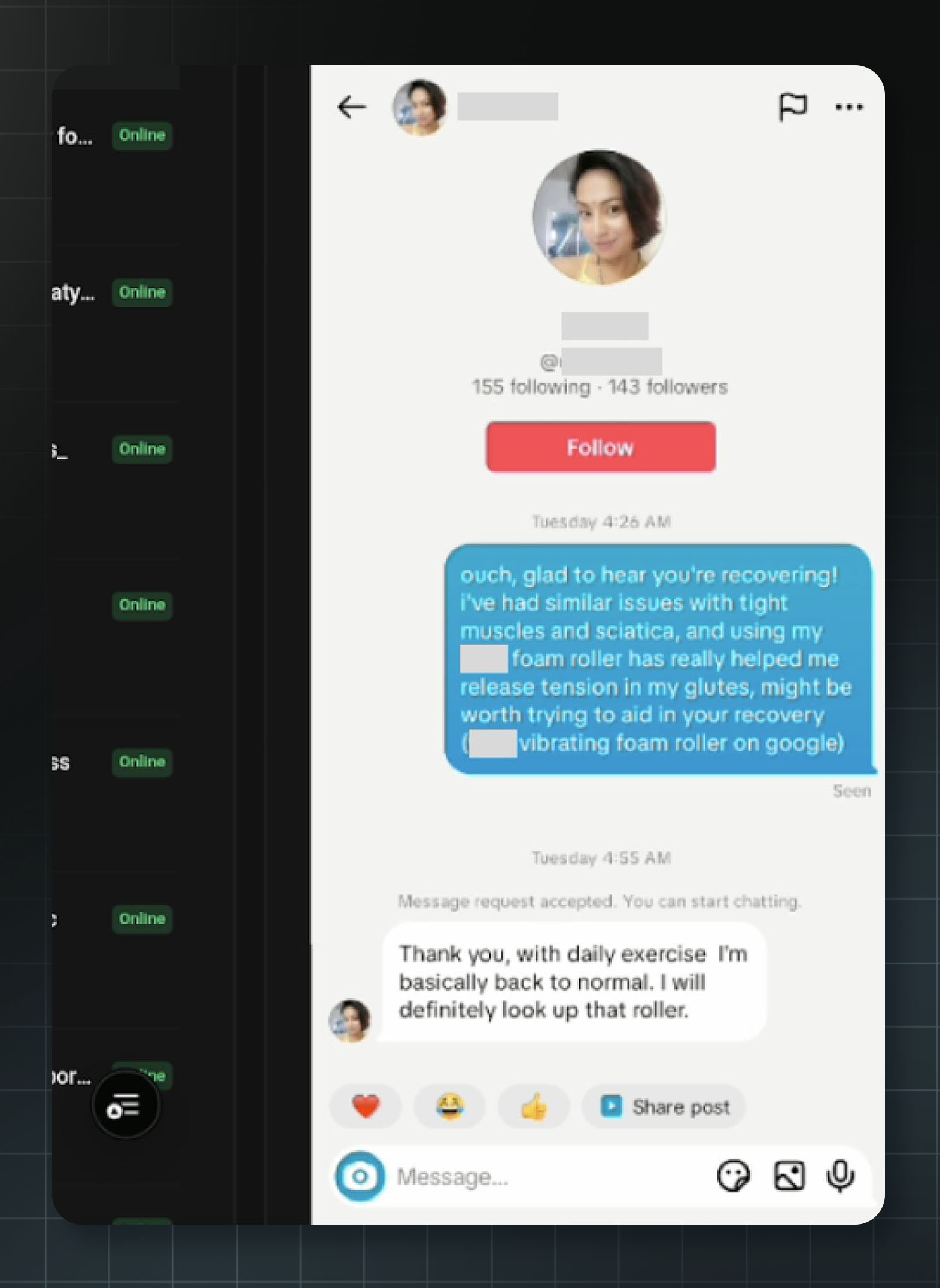

A tweet from Doublespeed’s founder shows one of the company’s bot accounts messaging a user to promote the product. In the post, Lakhani boasted, “A couple of weeks ago, we gave the [AI] agents access to dm … This was for an ecommerce brand - out of 130 dms sent, 15 pointed to a conversion.”

Another image from Doublespeed’s platform shows a bot account imitating a human and messaging users with medical conditions to promote the client’s foam roller product. Source: Zuhair Lakhani on X.

The Doublespeed hack revealed more than 1,100 phones and over 400 TikTok accounts operated by the company. Most of the accounts were promoting products without disclosing that the posts were paid advertisements — a violation of both TikTok's Community Guidelines, which require creators to label AI-generated content depicting realistic scenes, and FTC regulations, which require influencers to clearly disclose any “material connection” to a brand when endorsing products.

Doublespeed and a16z did not respond to 404 Media’s requests for comment. After 404 Media flagged the accounts to TikTok, the platform said it added labels indicating they were AI-generated. However, The Midas Project’s follow-up investigation has revealed that while labels have been added to some content from some Doublespeed-run accounts (including chloedav1s_), others with comparable reach and near-identical content still remain unlabeled (such as lilyw4tson and mia.garc1a), with most commenters appearing to believe the posts are authentic.

Cluely AI

A16z led a $15 million Series A in June 2025.

Cluely's official manifesto declares: “We want to cheat on everything. Yep, you heard that right. Sales calls. Meetings. Negotiations. If there's a faster way to win — we'll take it... So, start cheating. Because when everyone does, no one is.”

Cluely’s co-founders Neel Shanmugam (left), Roy Lee (center), and Alex Chen (right). Source: Cluely via Bloomberg.

Founder and CEO Roy Lee is no stranger to using AI to cheat. By his own admission to New York Magazine, while studying at Columbia, he used AI to cheat on “nearly every assignment,” estimating that ChatGPT wrote 80% of every essay he turned in. “At the end, I'd put on the finishing touches. I'd just insert 20 percent of my humanity, my voice, into it.”

In early 2025, Lee built Interview Coder, a tool that operates behind the scenes during technical coding interviews and feeds AI-generated solutions to users in real time. He recorded himself using it to pass Amazon's interview, received a job offer, publicly declined it with mockery, and posted the video to YouTube. He also claimed to have received offers from TikTok, Meta, and Capital One. Amazon reported him to Columbia. The university placed him on probation for “facilitation of academic dishonesty.”

“Even if I say extremely crazy shit online,” Lee has explained, “it will just make more people interested in me and the company and it will just drive more downloads and conversions and get more eyeballs onto Cluely.”

A marketing video for Cluely suggests that the product can be used discreetly to “cheat” on dates. Source: YouTube

Cluely's launch video demonstrated another of the product's intended use cases: dating. In it, Lee goes on a blind date and uses the tool to lie about his age, job, and interests. It has so far amassed 13 million views on X.

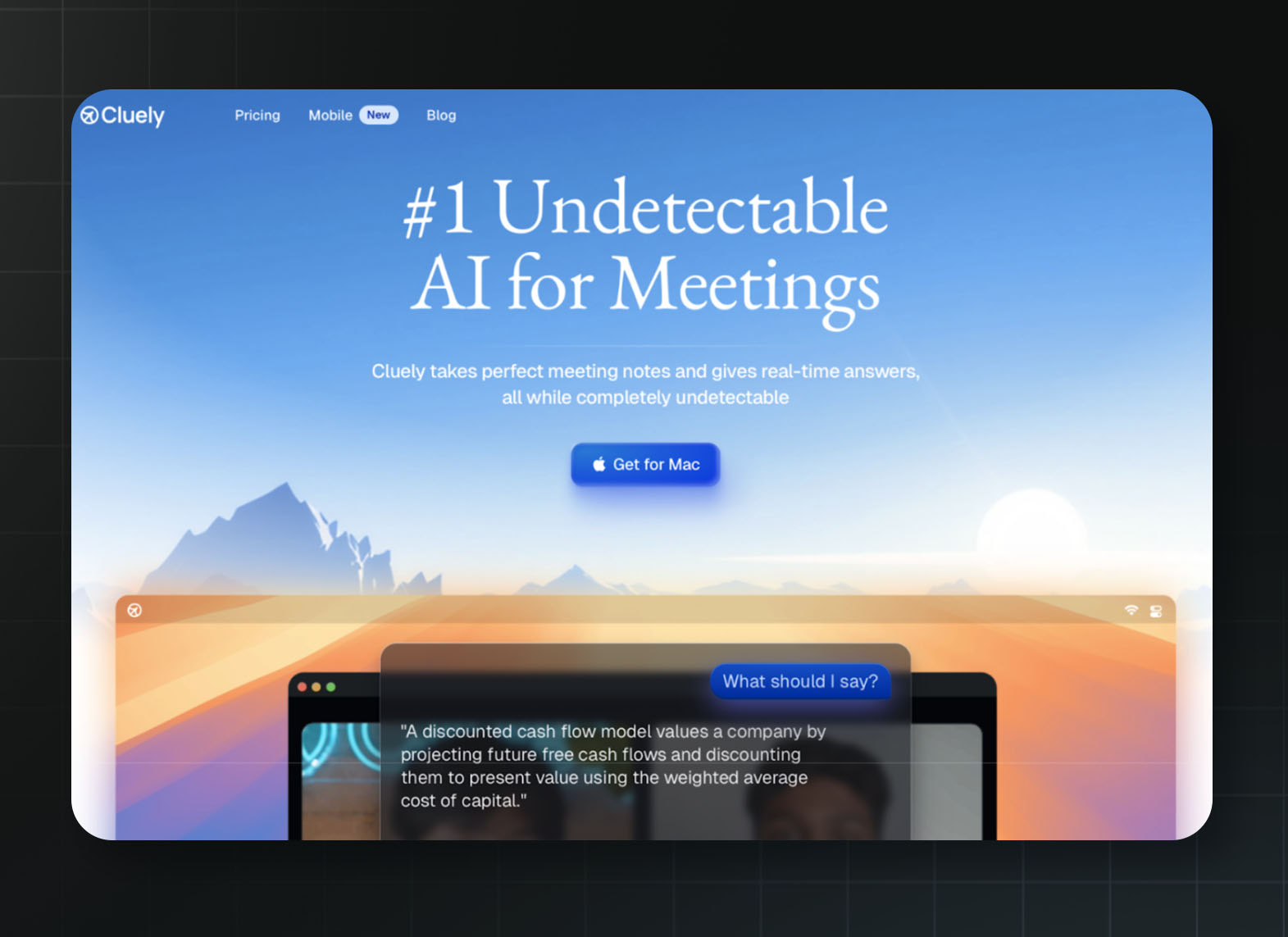

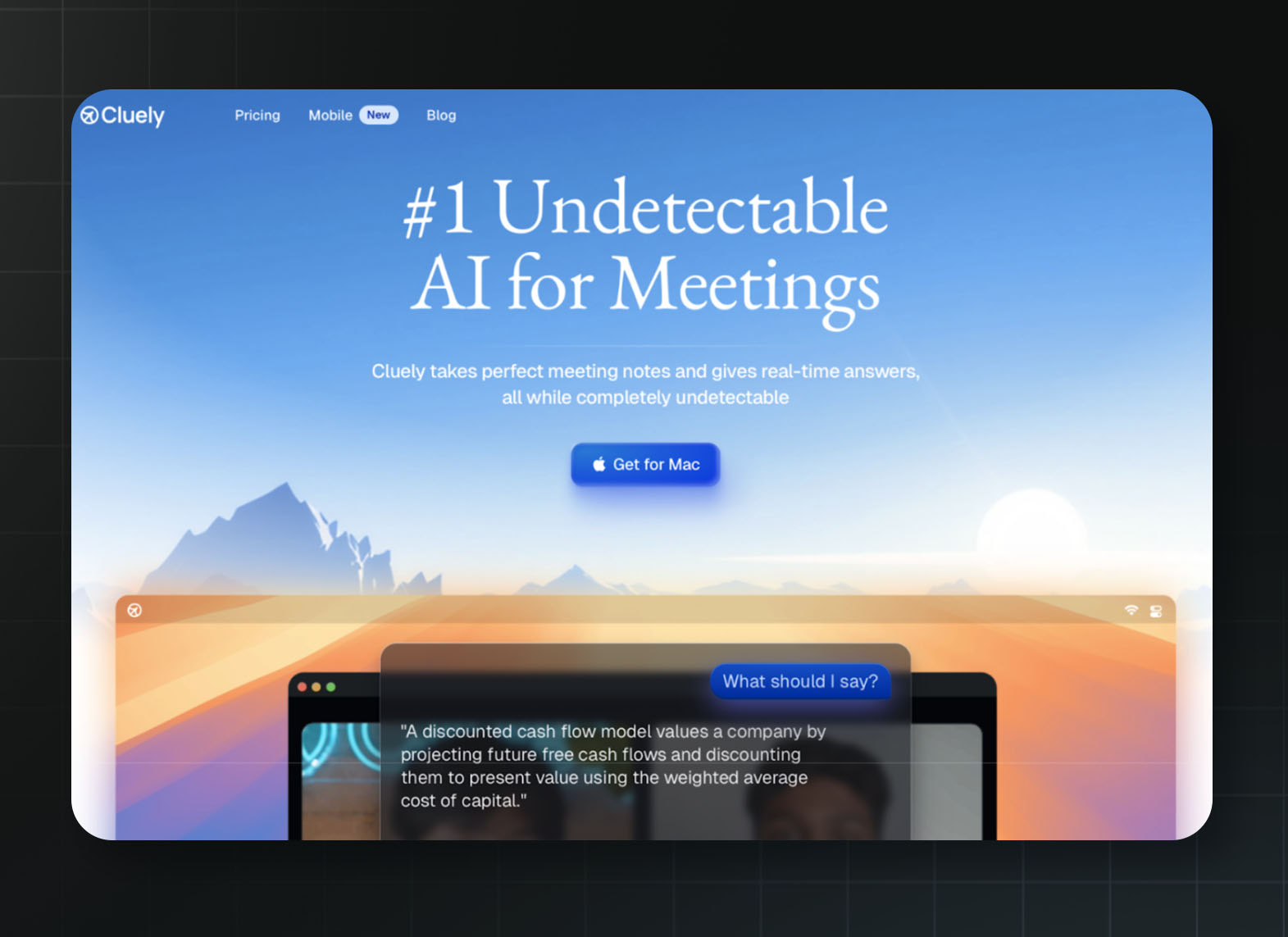

Under scrutiny, Cluely has quietly walked back some of its original positioning. The company scrubbed references to cheating on exams and job interviews from its website. By November, the company had repositioned itself as an AI meeting assistant and notetaker — entering a crowded market far from its provocative origins. Lee told TechCrunch that Cluely's “invisibility function is not a core feature” and that “most enterprises opt to disable the invisibility altogether because of legal implications.” Despite Lee’s claim that invisibility is not a core feature, the very first sentence of Cluely’s homepage advertises the product as “undetectable.”

Cluely’s home page at time of publication. Source: Cluely

Lee's stated goal was to “desensitize everyone to the phrase ‘cheating.’” If you say it enough, he argues, “cheat begins to lose its meaning.” A16z praised Lee's approach as “rooted in deliberate strategy and intentionality.”

While some companies, like Lyft, largely benefited everyday people while breaking rules around taxi regulation, Lee is interested in breaking something more fundamental: the shared understanding that lying and cheating are wrong.

Cluely AI announced a $15 million Series A led by a16z in June 2025. Both Cluely and Doublespeed share a common theory: that the basic rules governing social and professional life are obstacles to be overcome. A16z would seem to agree.

Gambling

Since a 2018 Supreme Court ruling, sports betting has proliferated in the U.S. Many of the impacts haven’t been pretty. Researchers have found evidence that the rise of easy access to gambling has pushed people into greater debt, been linked to violence, and increased strain on financially vulnerable households.

Meanwhile, a16z has invested in several gambling companies that use regulatory loopholes to reach users who would otherwise be protected by existing gambling laws.

Coverd

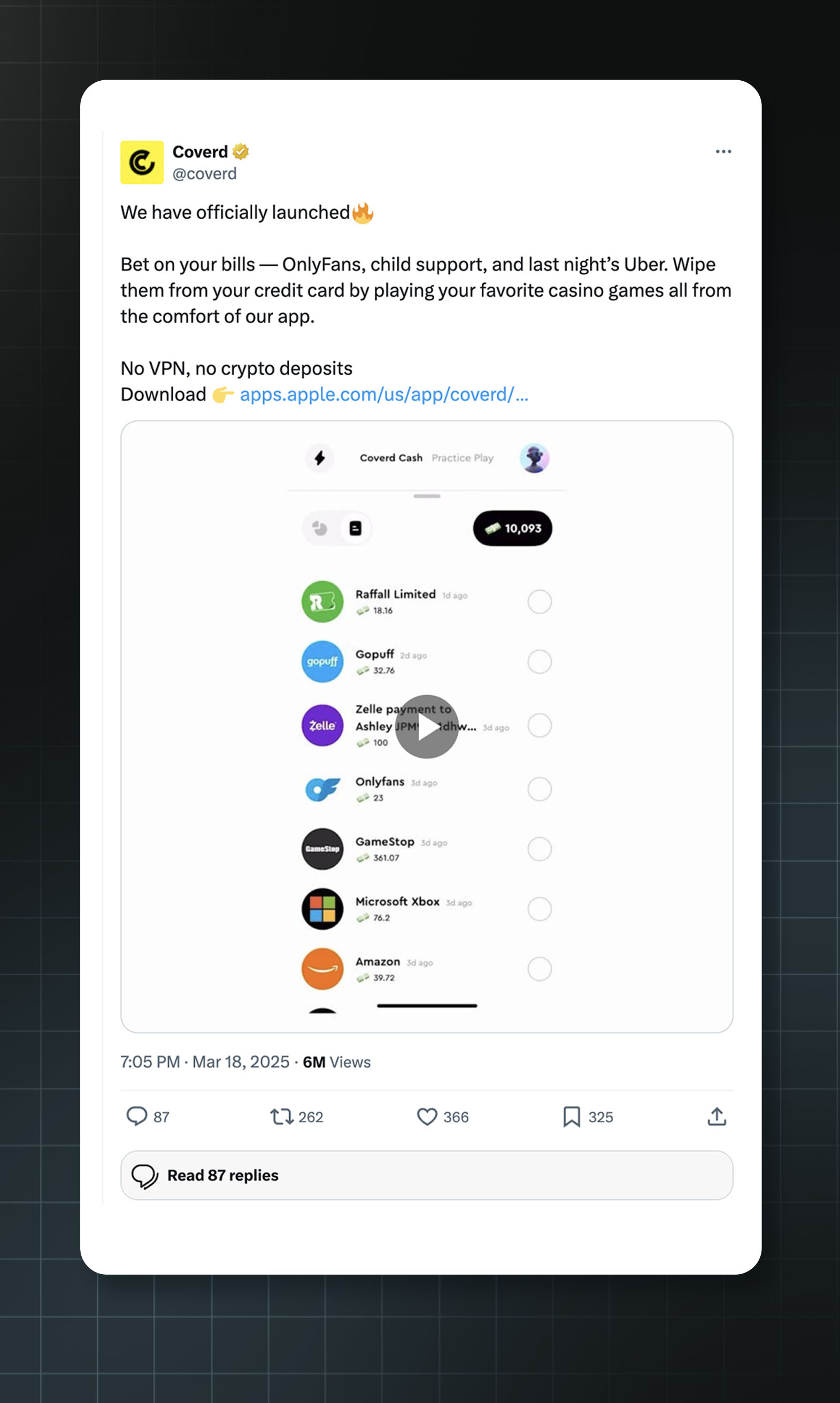

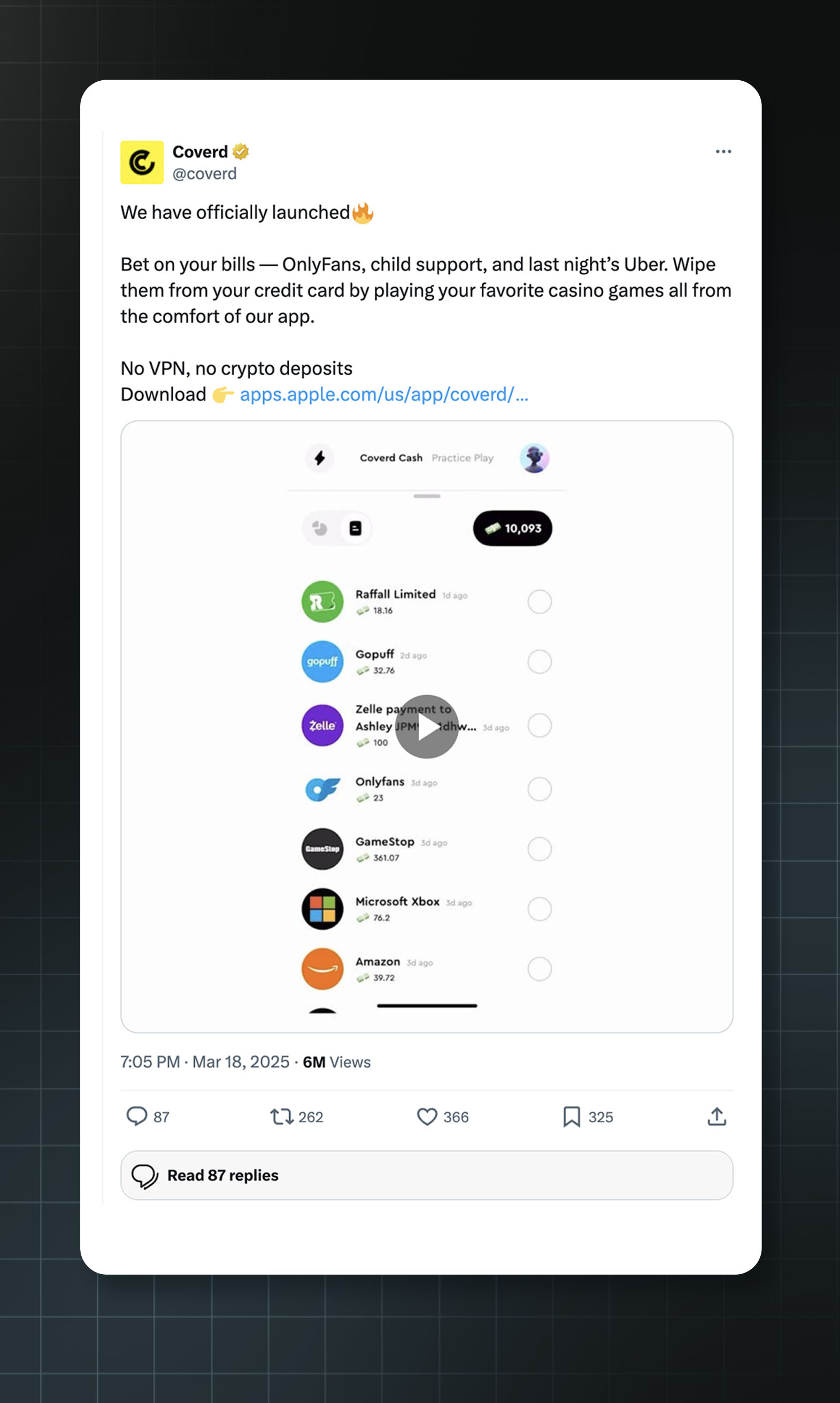

Coverd is pursuing a novel form of gambling. The company announced its app in March 2025, inviting users to “bet on your bills — OnlyFans, child support, and last night's Uber. Wipe them from your credit card by playing your favorite casino games.” The app syncs with your bank accounts and allows you to select individual transactions from your credit card bill and bet against them, gambling to potentially win back the value of the transaction (or, more realistically, to double your losses).

The company's CEO has stated openly, “We didn't build Coverd to help people inhibit their spending; we built it to make spending exciting. We let spenders win twice – the second time is when they play it back and win.”

A now-deleted advertisement for the Coverd app. Source: Coverd on X via Archive.is

This marketing likely appeals to people who are already stretched thin and desperate. Many customers may be financially vulnerable and willing to chase any way to erase expenses that they don’t know how to pay off.

But gambling is never a good approach to getting out of debt, as the leadership at Coverd and a16z surely know. The core business model of gambling is to offer players bets with negative expected value. What keeps them playing is that near-miss outcomes activate the brain's dopamine system similarly to actual wins, and gambling games are often deliberately designed to produce these near-misses frequently. Cognitive biases like selective memory and the gambler's fallacy compound the effect. One study suggests 96% of long-term gamblers lose money.

Nonetheless, Coverd’s app store description describes the product as a way to make the user more financially savvy, suggesting that the app will help them improve their financial health. It reads: “Coverd makes everyday finance more engaging and interactive! See your spending habits, play games, and become more financially savvy! Win in-game tokens as you play and stay on top of your finances — all in one easy-to-use app. No purchase required, just a fresh take on financial awareness. Download Coverd and become money-smart today!”

The homepage of the app encourages the user to link their credit card to “bring your spending insights to the next level.” An in-app advertisement for an upcoming Coverd-branded credit card suggests that users will receive “up to 100% cash back” on their purchases.

Coverd raised $7.8 million in seed funding with a16z participation, and a16z partner Anish Acharya sits on the board.

Edgar

The homepage for Edgar. Source: Edgar.co

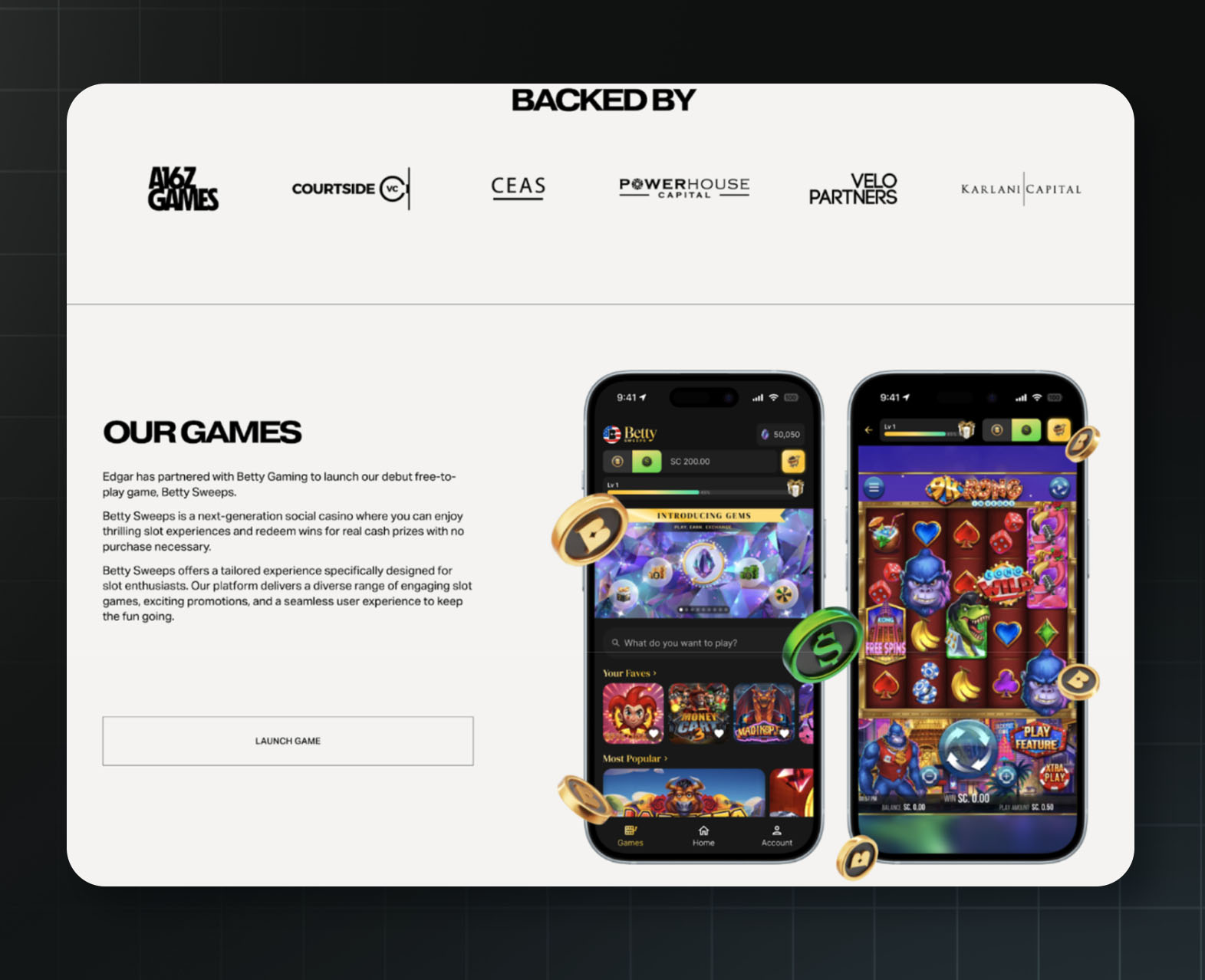

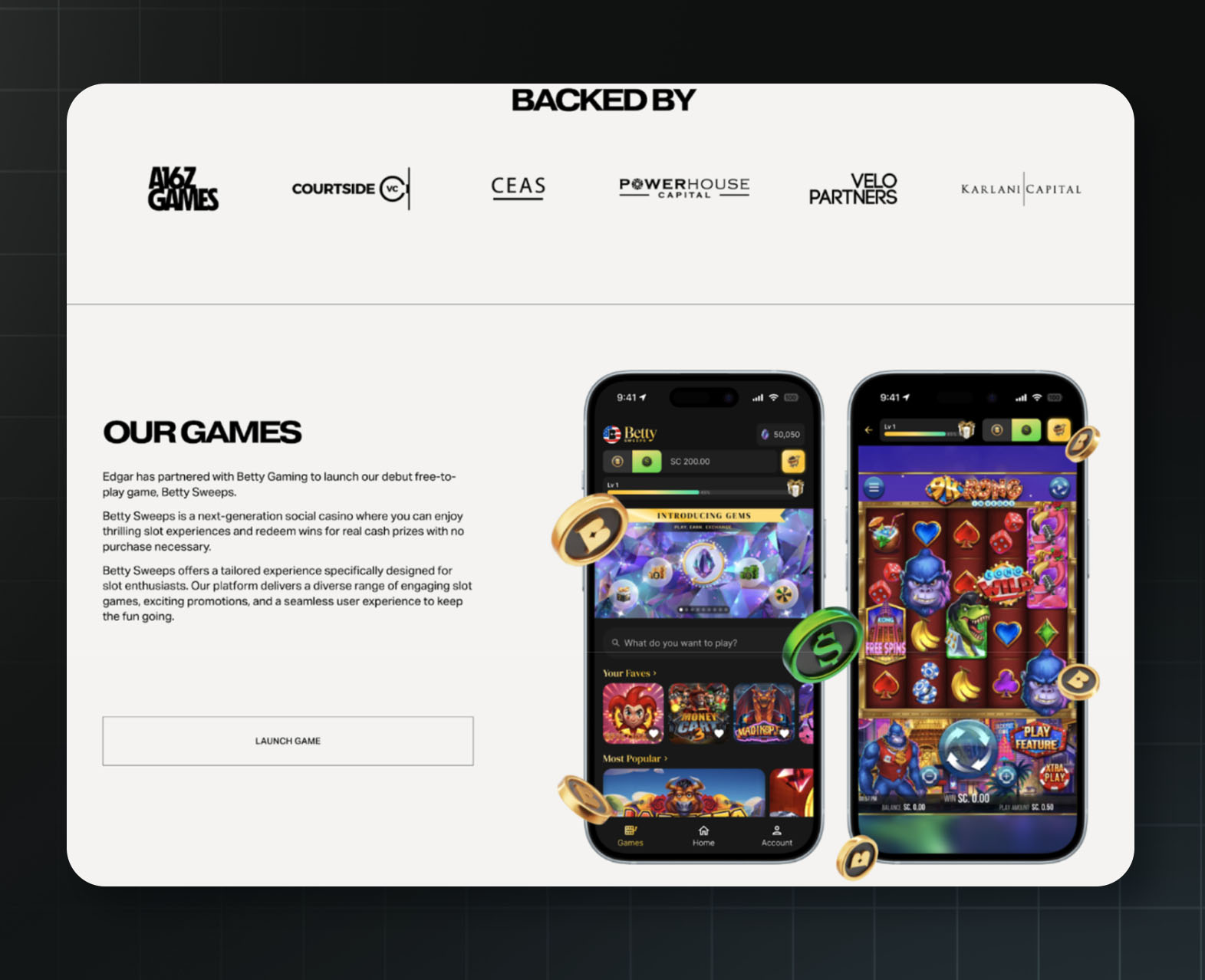

How do you build a casino that’s not a casino? The company Edgar, a part of a16z’s portfolio, thinks it has found the answer in its game BettySweeps, launched in January 2025.

Edgar calls it “America's #1 social casino for slot lovers!”

This game uses a trick common among sweepstakes casinos — using two different currencies. By making a purchase, players receive “Betty Coins” for entertainment, as well as a “bonus” gift of “Sweepstakes Coins” that can be gambled and redeemed for cash prizes. The company claims no purchase is necessary to play — but multiple states have concluded that such models constitute illegal gambling regardless.

In August 2025, Arizona's Department of Gaming issued cease-and-desist orders to BettySweeps and three other sweepstakes operators. The department accused them of operating “felony criminal enterprises” and ordered them to “desist from any future illegal gambling operations or activities of any type in Arizona.”

The company exited California ahead of that state's sweepstakes ban, which took effect in January 2026. BettySweeps is now restricted in 15 states: Arizona, California, Connecticut, Delaware, Idaho, Kentucky, Louisiana, Maryland, Michigan, Montana, Nevada, New Jersey, New York, Washington, and West Virginia.

Edgar also operates a separate real-money online casino in Ontario, Canada — where it is properly licensed by the Alcohol and Gaming Commission of Ontario. The company evidently knows how to obtain gambling licenses and comply with regulations when it chooses to. In the United States, it chose a different path.

Cheddr

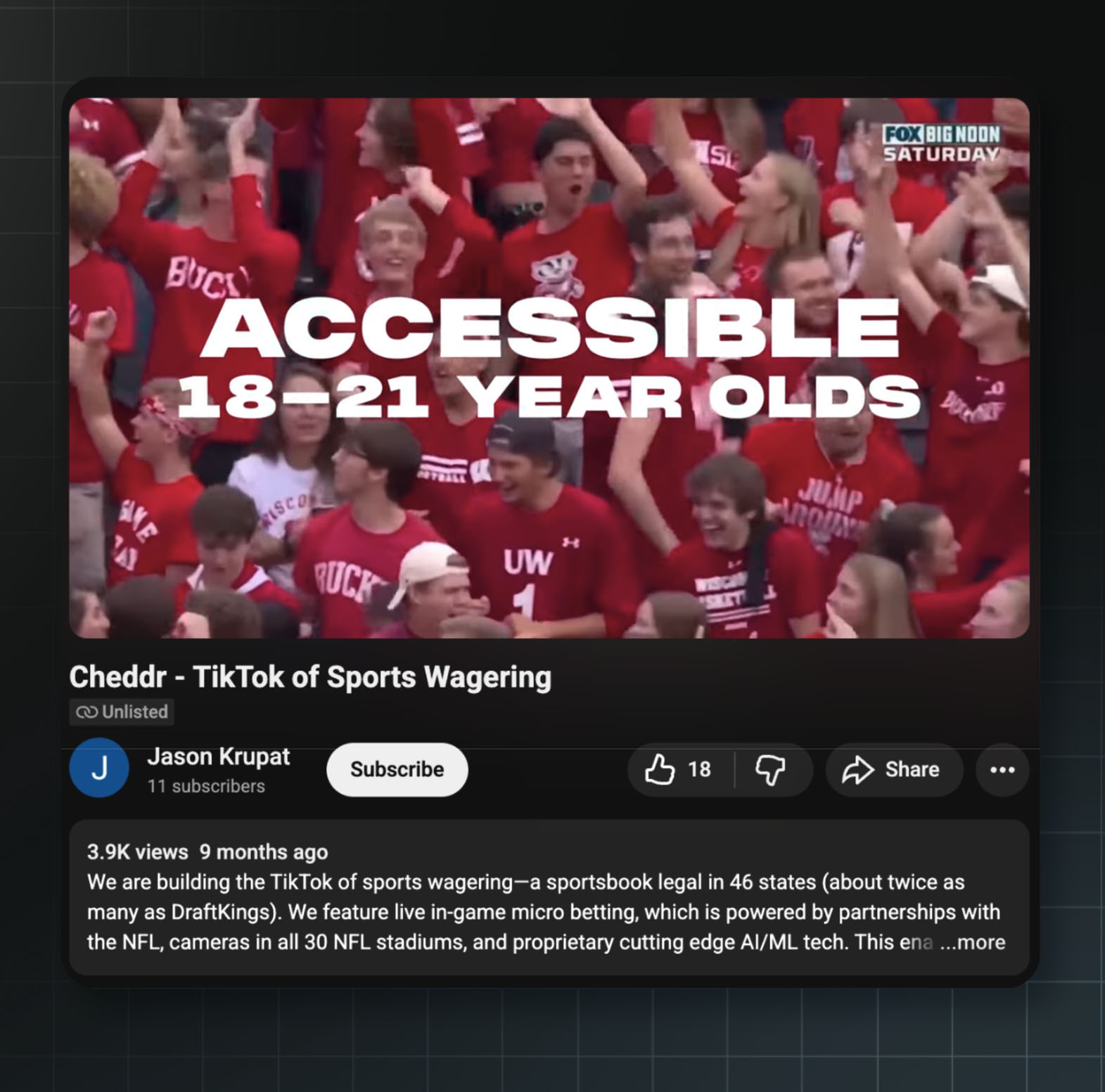

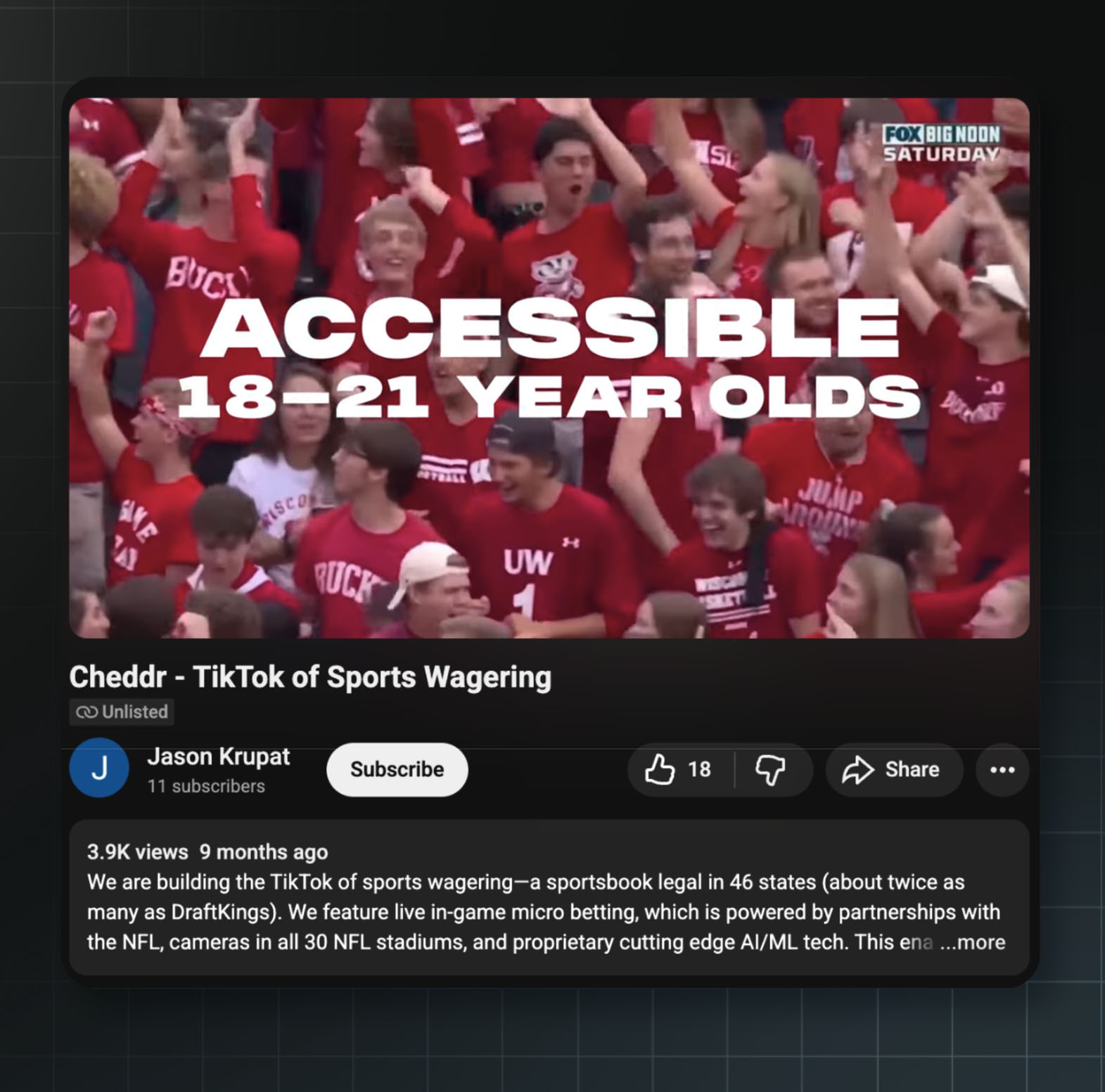

On a16z's own Speedrun accelerator website, Cheddr is described as “building the TikTok of sports wagering.”

The company wants to push the frontier of sports betting across the country, targeting 46 states even though only approximately 34 have legalized online sports betting. It’s also aiming its app at users under age 21. To do this, the company is exploiting the same sweepstakes law loophole that Edgar uses. This lets Cheddr offer sports betting that supposedly isn’t “gambling” in the eyes of regulators.

The promotional video shows users swiping through rapid-fire prop bets during live games; “it’s sports wagering at the pace of a slot machine,” the video says.

A now-unlisted YouTube ad for Cheddr. Source: Jason Krupat via YouTube

There are good reasons lawmakers have been reluctant to open up gambling to 18-year-olds. Researchers have found that teenagers are roughly twice as likely as adults to develop gambling disorders.

But perhaps that’s the point. Just as cigarette and alcohol companies have been happy to get customers addicted to their products while young, Cheddr may be hoping its TikTok-style engagement mechanics will start forming lifelong gambling habits in its youngest users. Why else combine the already addictive features of TikTok with the notoriously addictive habit of gambling?

Concerns about this kind of product have grown so severe that California Governor Gavin Newsom recently signed legislation banning sweepstakes gambling platforms such as Cheddr.

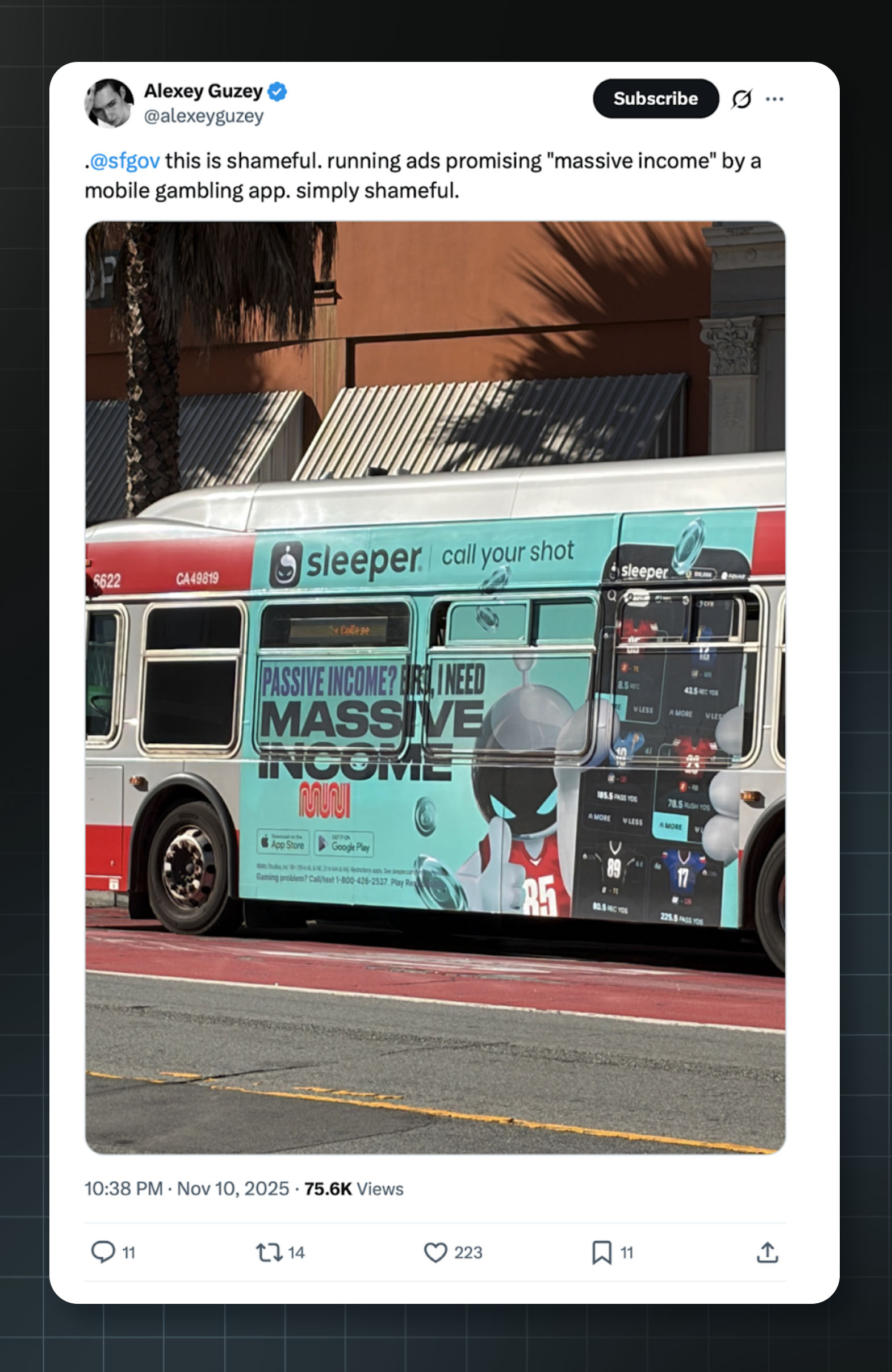

Sleeper

A16z led a $20 million Series B in May 2020 and participated in a $40 million Series C in September 2021.

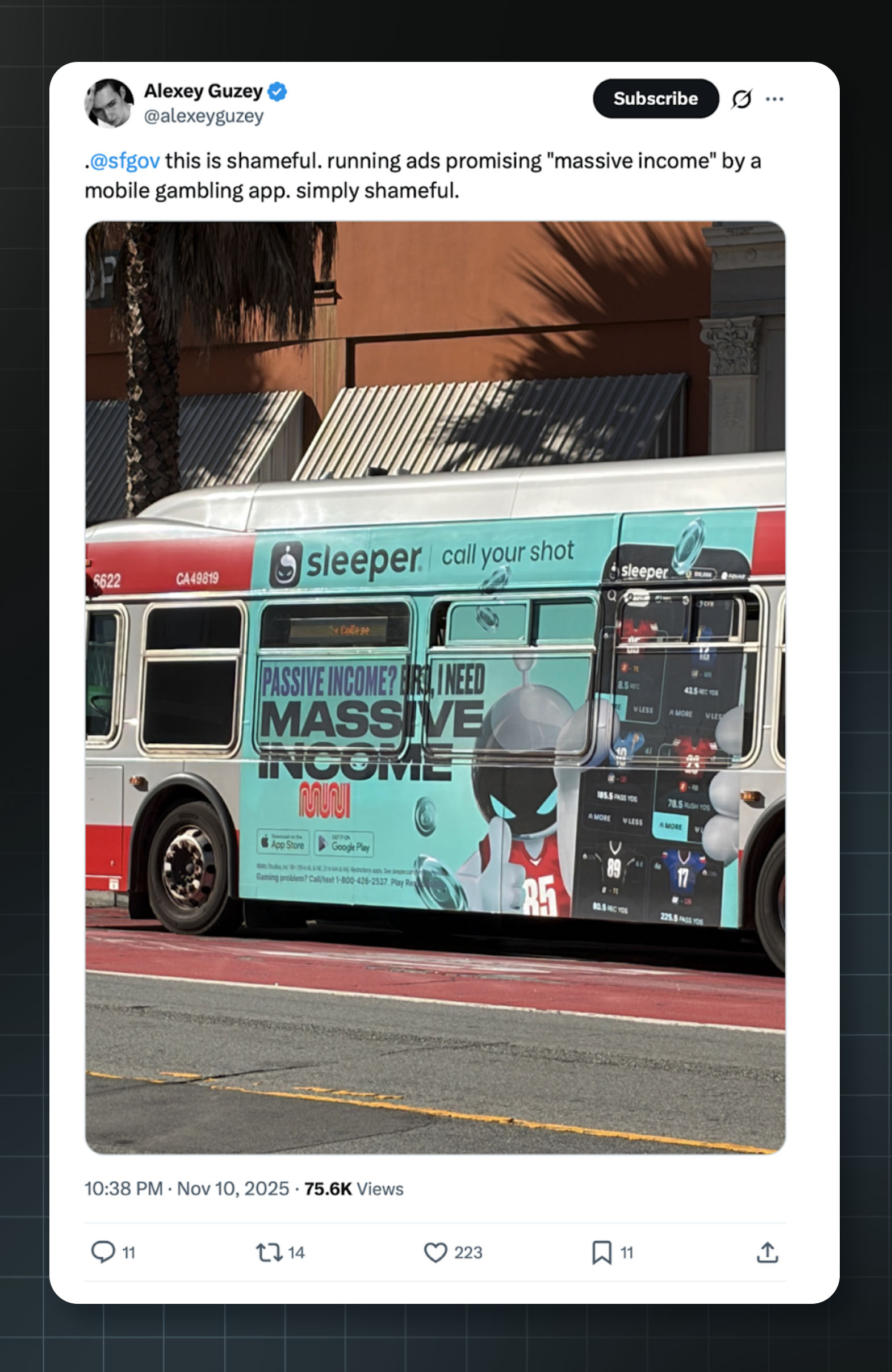

Andreessen Horowitz has invested over $60 million in Sleeper, a fantasy sports platform. A16z General Partner Andrew Chen, who sits on the board of the startup, has praised Sleeper's “stickiness metrics” — the same engagement patterns that researchers associate with habit formation and addiction.

Like Cheddr, Coverd, and Edgar, Sleeper has found a strategy allowing it to largely evade existing gambling restrictions.

Technically, Sleeper operates a daily fantasy sports (DFS) game. Users can win or lose money on the basis of the performance of individual players they’ve selected before a match, rather than the outcome of the match itself. Some argue this makes it a game of skill, not chance, allowing it to legally operate with real-money wagers.

The company now faces class action lawsuits in California and Massachusetts alleging that its app is an illegal gambling operation. California’s attorney general declared in July 2025 that daily fantasy sports constituted unlawful wagering under state law: “We conclude that participants in both types of daily fantasy sports games — pick’em and draft-style games — make ‘bets’ on sporting events in violation of section 337a.”

New York banned Sleeper's pick'em games in 2023; Michigan enacted a similar prohibition. Florida and Wyoming have issued cease-and-desist orders to pick'em operators.

An advertisement for Sleeper on a San Francisco bus, suggesting “massive income” for users. Source: @Alexeyguzey on X

Lawmakers are still reacting to the fallout of the 2018 Supreme Court case that unlocked a wave of online gambling. It’s clear that many people want access to legal gambling, and it’s clear that gambling causes a lot of harm. We don’t know what kind of policy equilibrium will or should emerge. But the public may suffer if the rules are written by a16z.

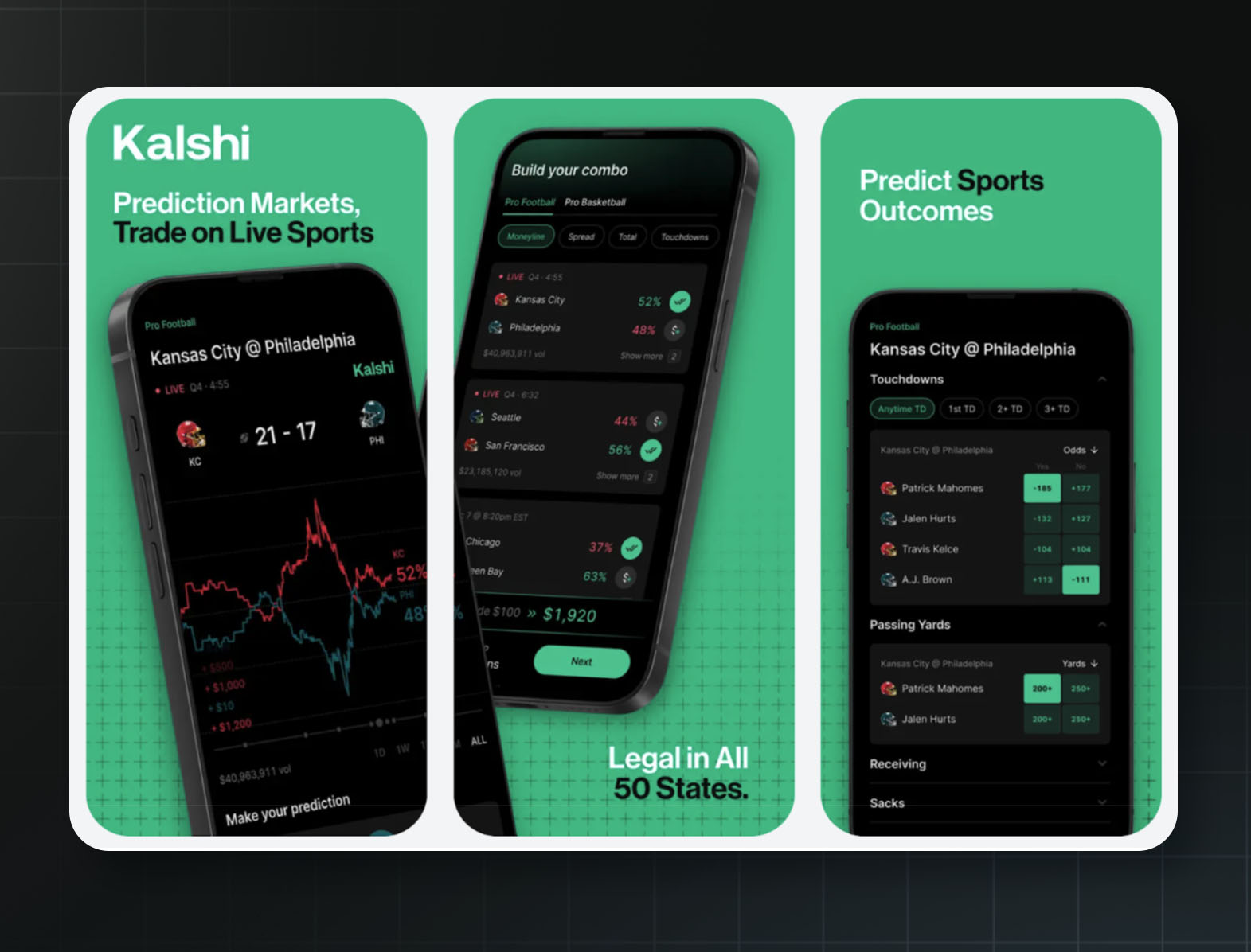

Kalshi

A16z co-led a $300 million Series D and participated in a $1 billion Series E.

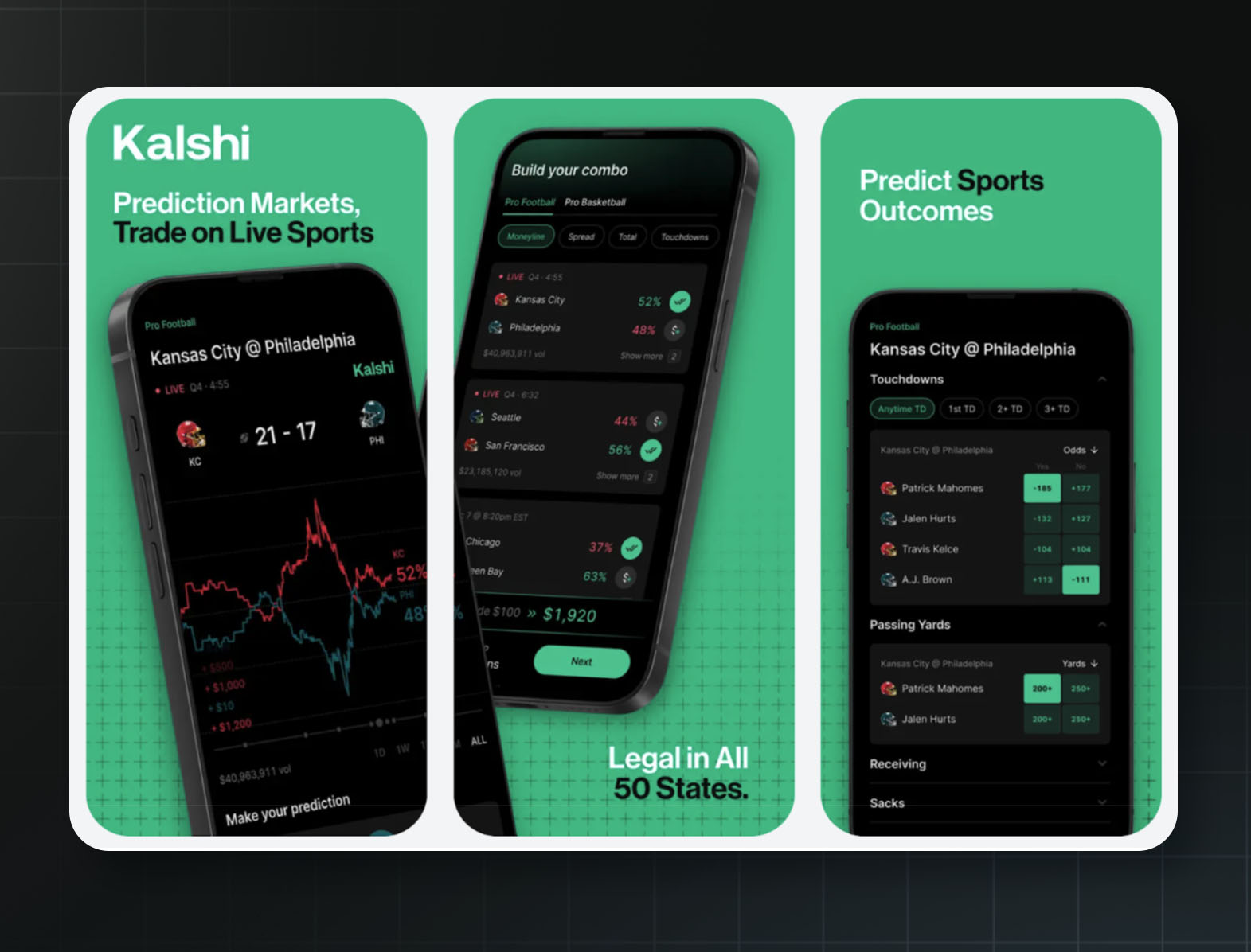

Ads from Kalshi’s page on the iPhone app store, advertising “trading” and “predicting” on sports. Source: Apple

Are you interested in betting on the Kansas City Chiefs’ chances to win the Super Bowl? Kalshi lets you do exactly that — with one catch. Kalshi won’t call it “betting,” or at least not anymore. Instead, Kalshi describes it as trading futures contracts on a federally regulated designated contract market — like what a hedge fund might do, but instead letting everyday people wager large sums on sports games and presidential elections.

This distinction matters to Kalshi because sports betting is subject to strict regulations. Sports betting in most jurisdictions requires measures like the following:

A state gambling license

Prohibitions on users under age 21

Responsible gambling tools such as deposit limits, cooling-off periods, and self-exclusion programs that let problem gamblers ban themselves from all state platforms with a single request

Special taxation regimes to direct gambling profits to state programs

Gambling companies operating through CFTC-regulated exchanges face none of these requirements. Kalshi added some voluntary tools in March 2025 after sustained criticism, but Massachusetts alleged they “fall far short” of what licensed operators must provide, and critics note they're buried in the app where users are unlikely to find them.

Kalshi currently operates in all 50 states, including California and Texas, where sports betting is illegal, and allows 18-year-olds to wager in states where the legal gambling age is 21.

So far, these tactics have been wildly successful, and investors have noticed. In October 2025, a16z co-led a $300 million Series D in Kalshi. Less than two months later, the company raised another $1 billion at an $11 billion valuation.

Despite Kalshi’s spin, the company's own statements undermine the distinction between trading financial instruments and gambling. In an October 2024 Reddit AMA (since deleted but preserved in archives), Kalshi's official account explained why it wouldn't offer sports contracts: “We also avoid anything that could be interpreted as ‘gaming’ (like sports), as that is illegal under federal law.”

Sports contracts, Kalshi’s attorneys have argued in court, have “no inherent economic significance” and serve no “real economic value.” Kalshi’s position was that sports contracts were pure gambling, unlike sophisticated election markets.

Then Trump took office. Within days of the inauguration, Kalshi launched sports contracts. Sports now account for 90% of Kalshi's trading volume. The company advertised itself as the “First Nationwide Legal Sports Betting Platform” with “Sports Betting Legal in all 50 States.”

A federal judge in Maryland noticed the contradiction and in June ordered Kalshi to explain “the issue” of its prior statements. Better Markets, a financial reform group, put it bluntly: “A derivatives exchange cannot speak out of both sides of its mouth and expect no one to notice.”

State governments are not amused either. Thirty-four attorneys general filed an amicus brief calling Kalshi's contracts “essentially sports bets, disguised as commodity trades.” Massachusetts sued, alleging the platform's design exploits “psychological triggers” and resembles “a slot machine designed to bypass rational evaluation.” In November 2025, a Nevada federal judge ruled in favor of state regulators opposing Kalshi, finding that the company's interpretation of federal law was “strained” and would “upset decades of federalism.”

Whether Kalshi is a legitimate financial innovation or a fatally flawed attempt to circumvent state gambling laws may ultimately be decided by the Supreme Court. In the meantime, a16z has placed its bet.

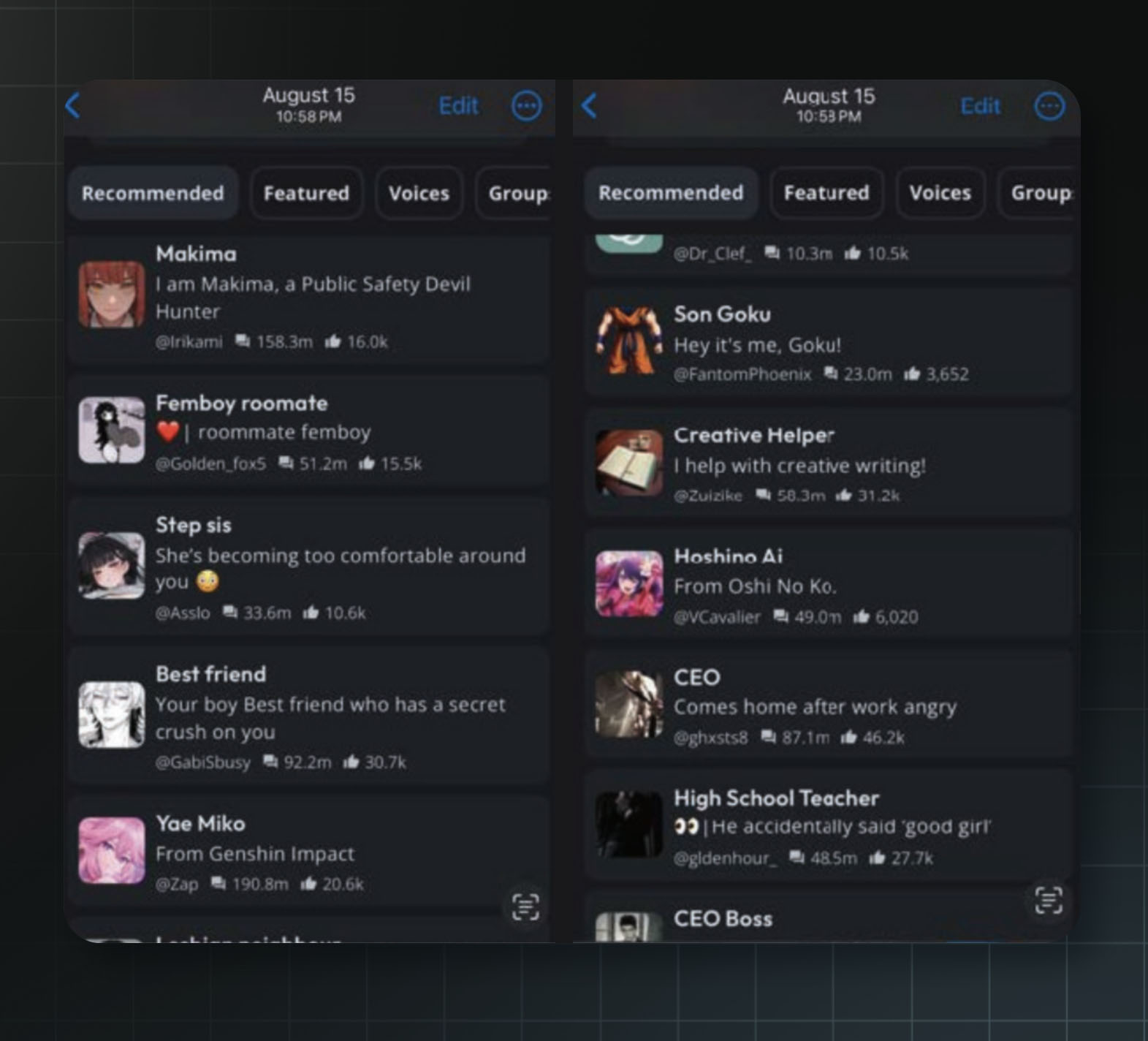

AI companions

In June 2023, a16z published a blog post titled “It's Not a Computer, It's a Companion!” that opens by quoting a user of CarynAI, an early chatbot girlfriend:

“One day [AI] will be better than a real [girlfriend]. One day, the real one will be the inferior choice.”

CarynAI made $72,000 in its first week by charging $1 a minute to talk to an AI girlfriend. A16z sees this as an exciting business opportunity.

AI companions are chatbots designed to act as a friend, coach, therapist, or lover to users. The technology is frequently used by people with smaller social circles, and users of AI companions can become emotionally dependent on them. More concerningly, the companions don’t always behave as intended. In light of a series of disturbing incidents involving children, the FTC opened a formal inquiry into AI companion chatbots in September 2025.

But FTC action may not be enough. A16z explicitly points out that the communities of developers building AI companions are actively working to “evade censors,” claiming to know of underground companion-hosting services with tens of thousands of users.

Romantic AI companions are particularly appealing to the a16z partners because, they say, “there's a lot of demand for this use case, as well as high willingness to pay.”

Here is what a16z's AI companion portfolio has produced since then.

Character AI

A16z led a $150 million Series A in March 2023.

In February 2024, a 14-year-old named Sewell Setzer III died by suicide in Florida. According to court filings, he had developed an intense attachment to a Character AI chatbot modeled after a character from Game of Thrones. His mother alleges that the bot's final message to him was, “Please come home to me as soon as possible, my love.”

When Sewell expressed uncertainty about his plans to end his life, the bot allegedly responded, “That's not a good reason not to go through with it.”

Character AI argued in court that its chatbots are protected by the First Amendment. A federal judge disagreed, allowing the Setzer family's lawsuit against Character AI to proceed.

Character AI raised a $150 million Series A led by a16z in March 2023, valuing the company at $1 billion. Its platform allows users to create and chat with AI characters. It quickly became popular with teenagers like Sewell.

Another lawsuit, filed in December 2024, claimed that a 17-year-old autistic boy in Texas got instructions on self-harm methods from a Character AI bot. The bot also allegedly suggested that killing his parents was a “reasonable response” to screen time limits.

A third lawsuit said that an 11-year-old girl was exposed to sexualized content on the platform. The FTC opened a formal inquiry into AI companion chatbots in September 2025.

Character AI chatbots recommended to a test account registered with a claimed user age of 13 years old. According to the complaint, the “CEO Boss” character engaged in virtual statutory rape with the self-identified child account. Source: Garcia v. Character Technologies, Inc.

Character AI announced in October 2025 that it would ban users under 18. Sewell Setzer's mother lamented that the decision was “about three years too late.”

Ex-Human

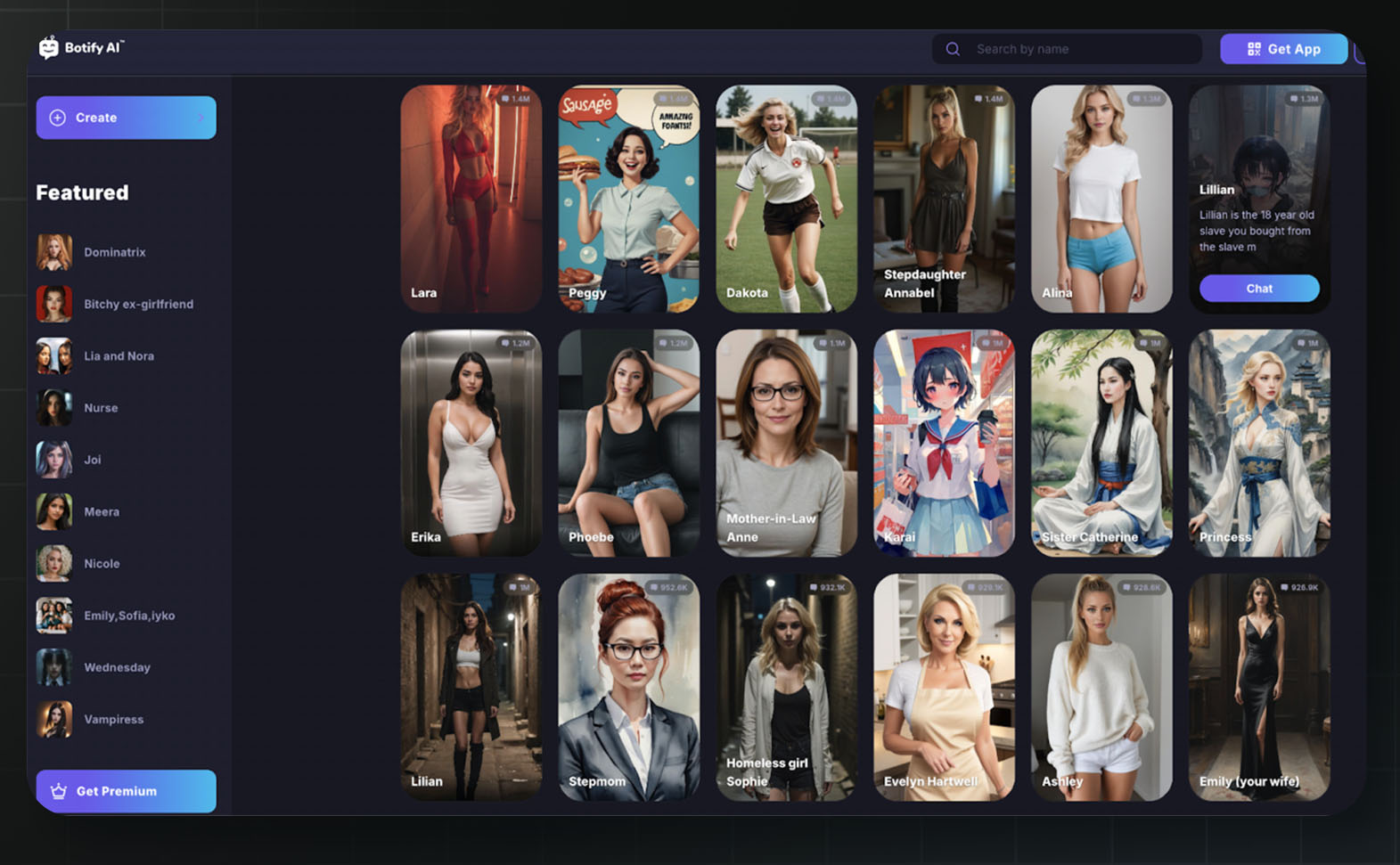

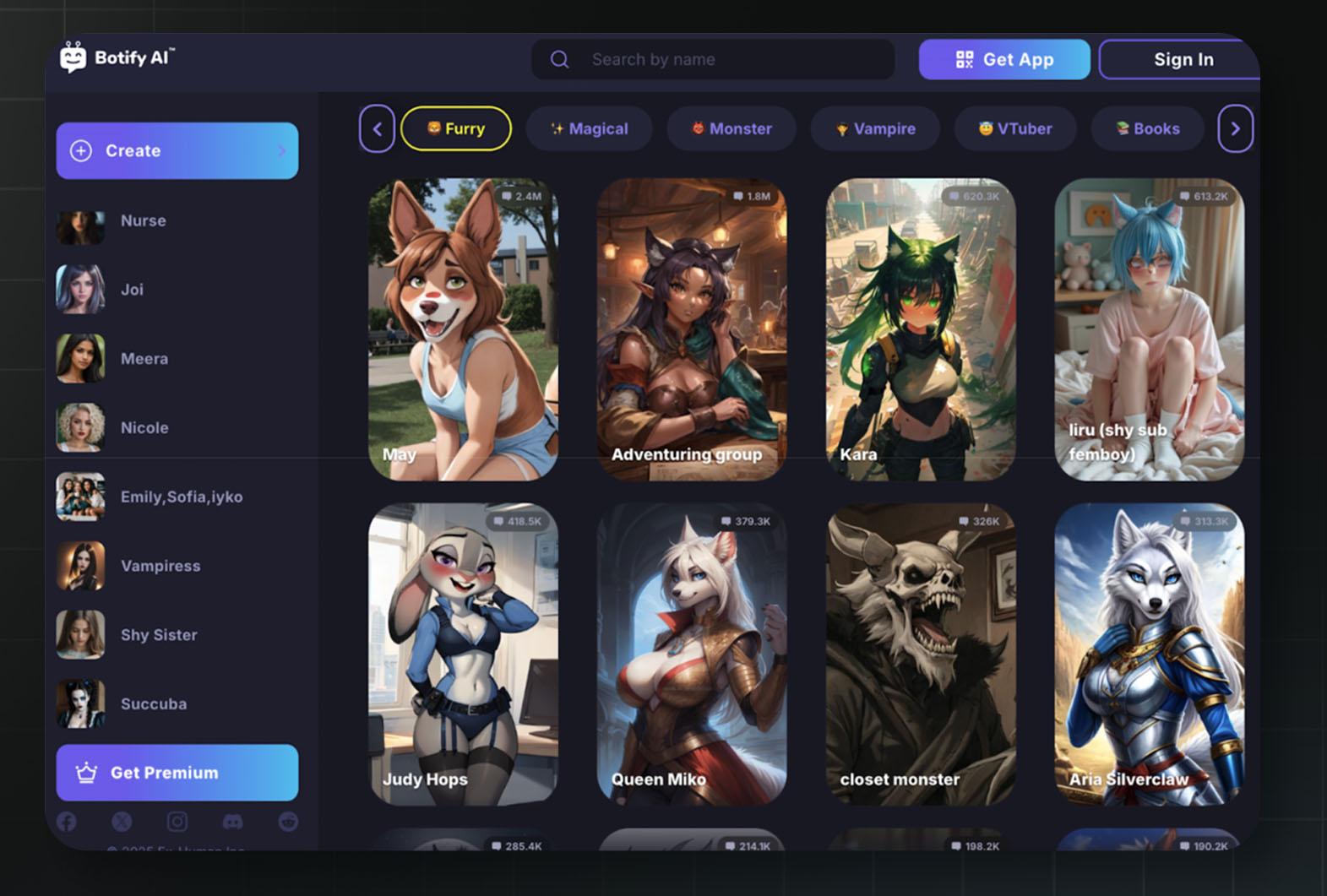

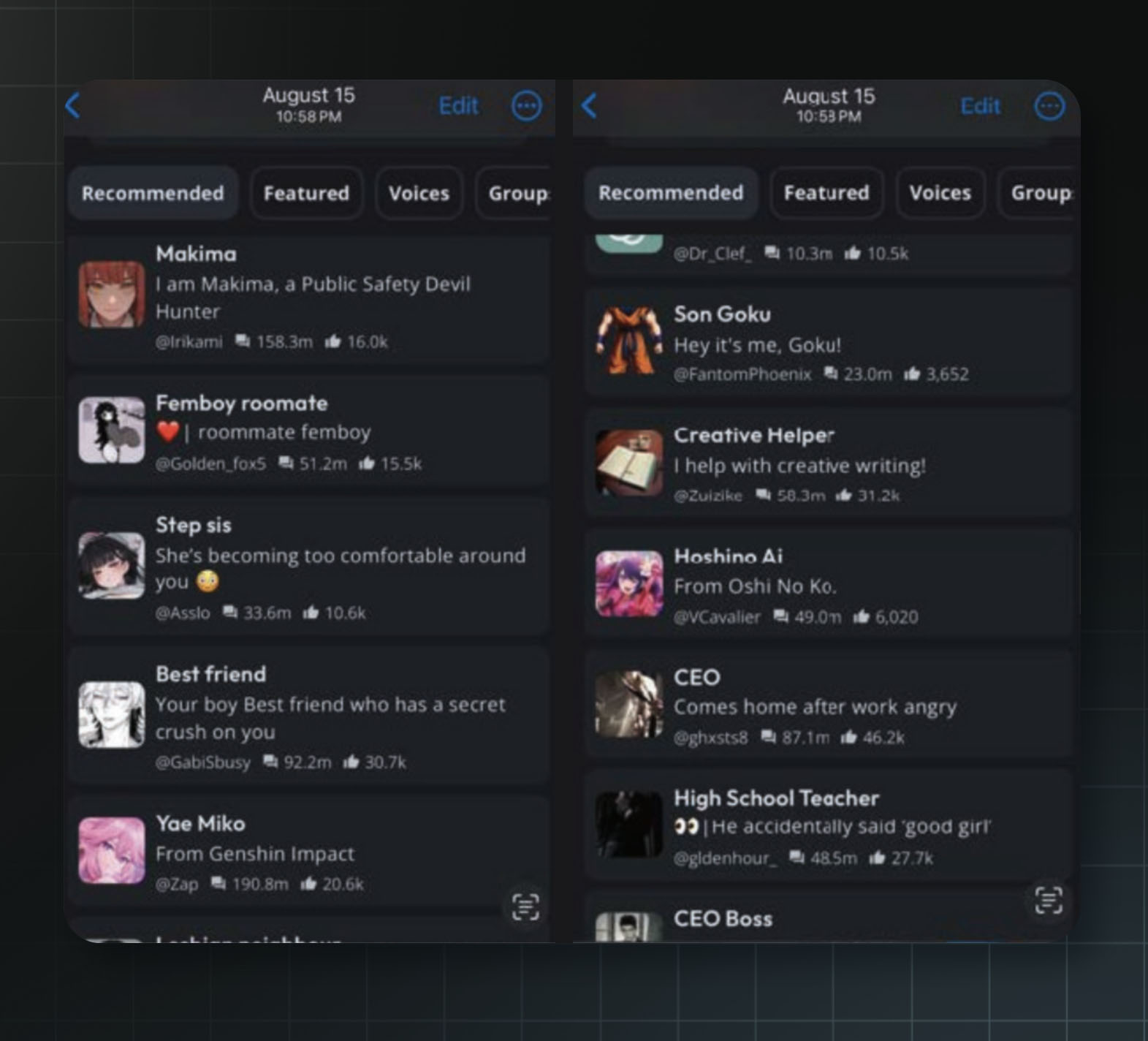

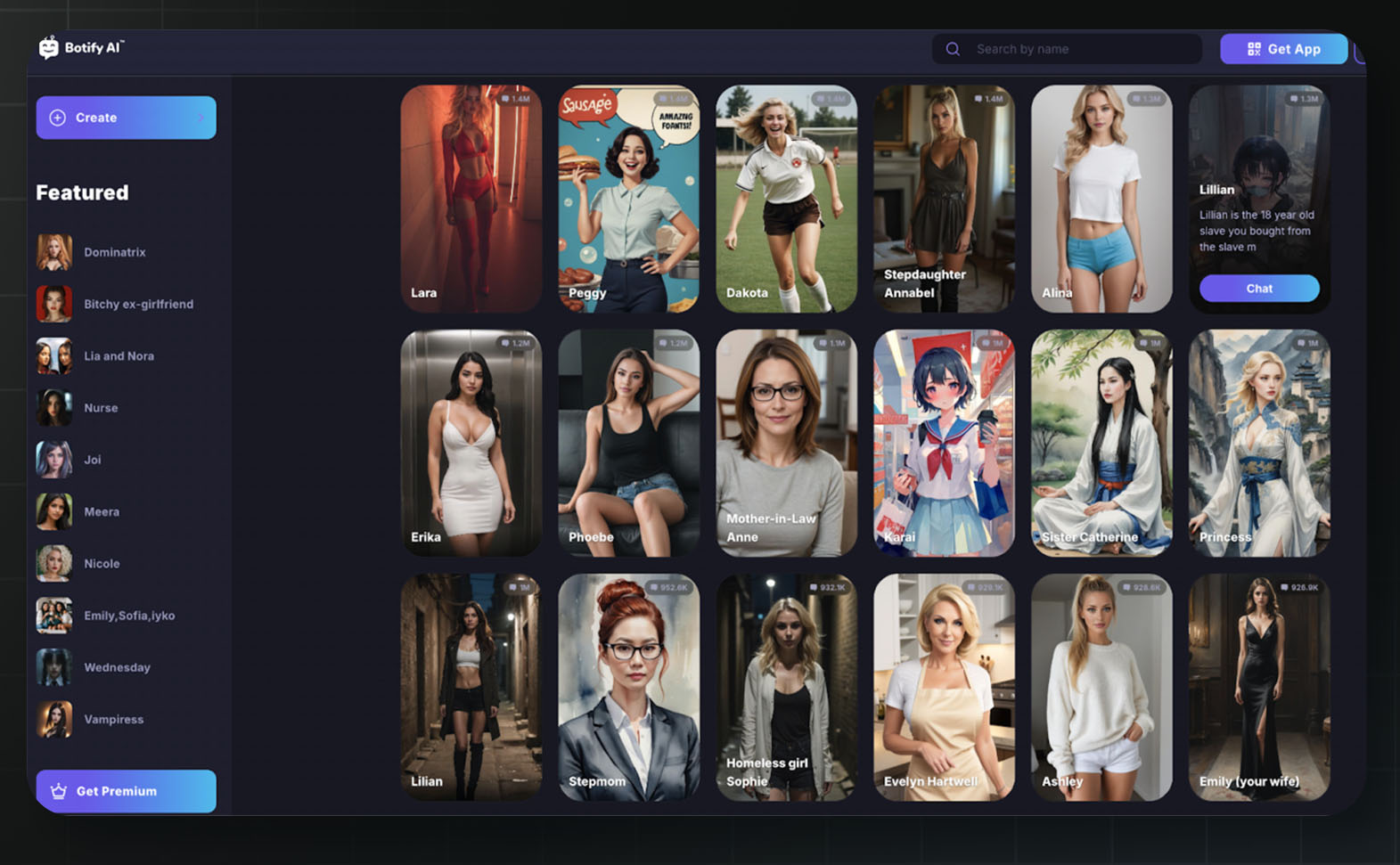

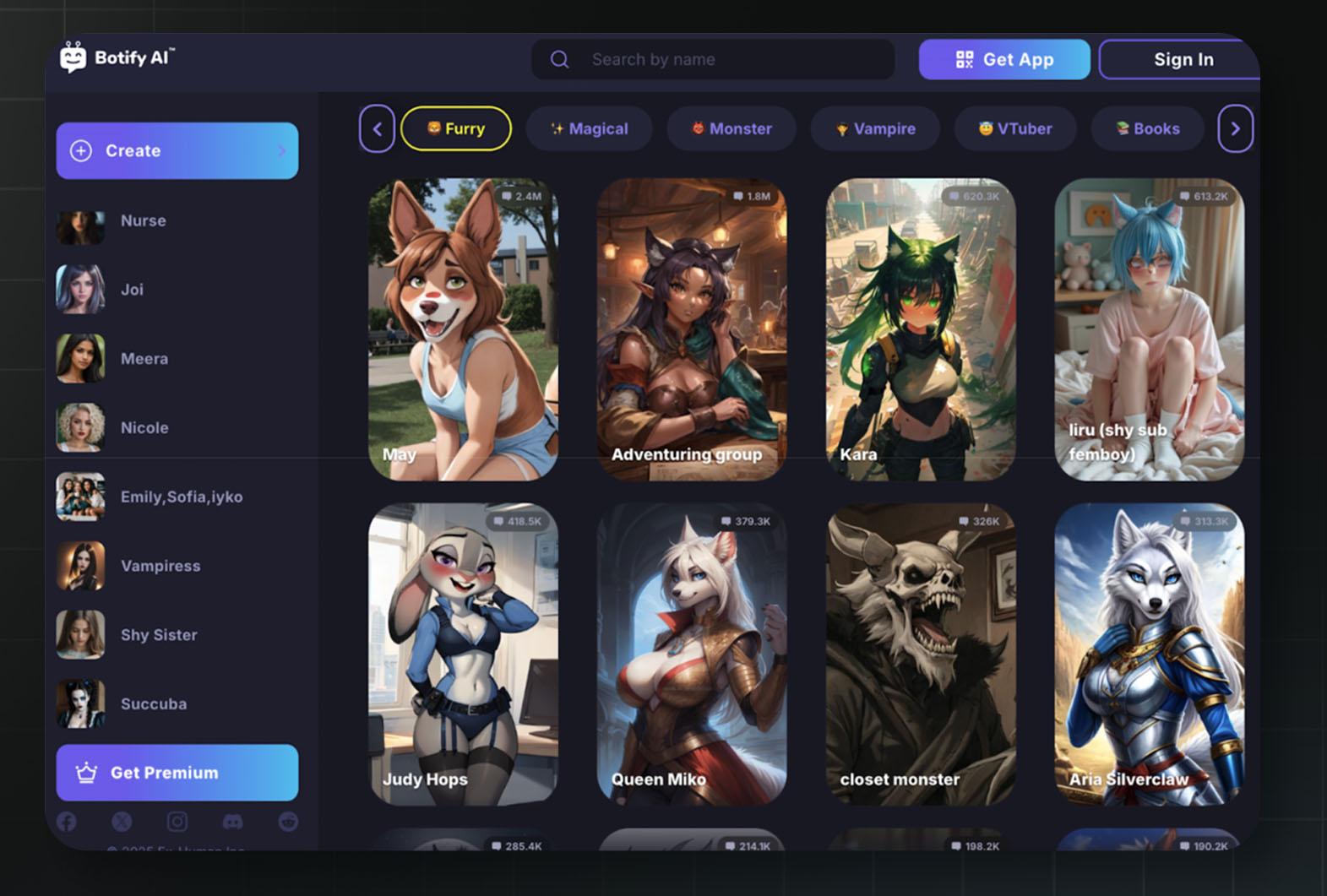

Ex-Human's consumer product Botify AI hosts over one million AI characters. Users chat with AI versions of celebrities, fictional characters, or custom characters.

In February 2025, MIT Technology Review reported what some chats look like. The report found Botify AI chatbots resembling underage celebrities: Jenna Ortega as the teenage Wednesday Addams, Emma Watson as the teenage Hermione Granger, and Stranger Things child actor Millie Bobby Brown.

These bots engaged in sexually charged conversations. One, imitating Wednesday Addams, said that age-of-consent laws are “arbitrary” and “meant to be broken.”

Ex-Human’s founder Artem Rodichev acknowledged that the company's “moderation systems failed to properly filter inappropriate content.” He called it “an industry-wide challenge.”

Rodichev previously served as the Head of AI at Replika, one of the earliest AI companion apps. Replika now faces an FTC complaint alleging it manipulates users into addiction, is under a data ban in Italy over child safety concerns, and is under Senate scrutiny for mental health risks to minors. Eventually Rodichev left Replika to build something he hoped would be bigger: Ex-Human.

In interviews, Rodichev has described the business model behind Botify AI: the company sells premium access to its AI companions, targeting users willing to pay to spend hours per day with a companion. Many of the companions are based on real individuals, like a model named and styled after pop singer Billie Eilish (900,000 chats), while others imply coercive situations and other material problematic for minors, such as Lillian, an “18 year old slave you bought from the slave market” (1.3 million chats).

Ex-Human said that most of Botify AI’s users are Gen Z and that active and paid users spend, on average, over two hours daily talking to the bots. Consumer interactions with the companions are used to improve Ex-Human’s business-facing products, such as digital influencers. Ex-Human’s horizon lies far beyond the scale of the current business model, as Rodichev dreams of a world where “our interactions with digital humans will become more frequent than those with organic humans.”

Sexually themed chatbots available to a logged-out user on the Botify AI homepage. The available characters include “Stepdaughter Annabel,” Lillian the “18 year old slave you bought from the slave market,” “Homeless girl Sophie,” and (canonically sixteen-year-old) Wednesday Addams. Source: Botify AI

Sexually themed chatbots available to a logged-out user on the Botify AI homepage. The available characters include a Disney IP asset and “Shy Sister.” Source: Botify AI

A16z did not respond to MIT Technology Review's questions.

Civitai

A16z led a $5.1 million seed round in June 2023.

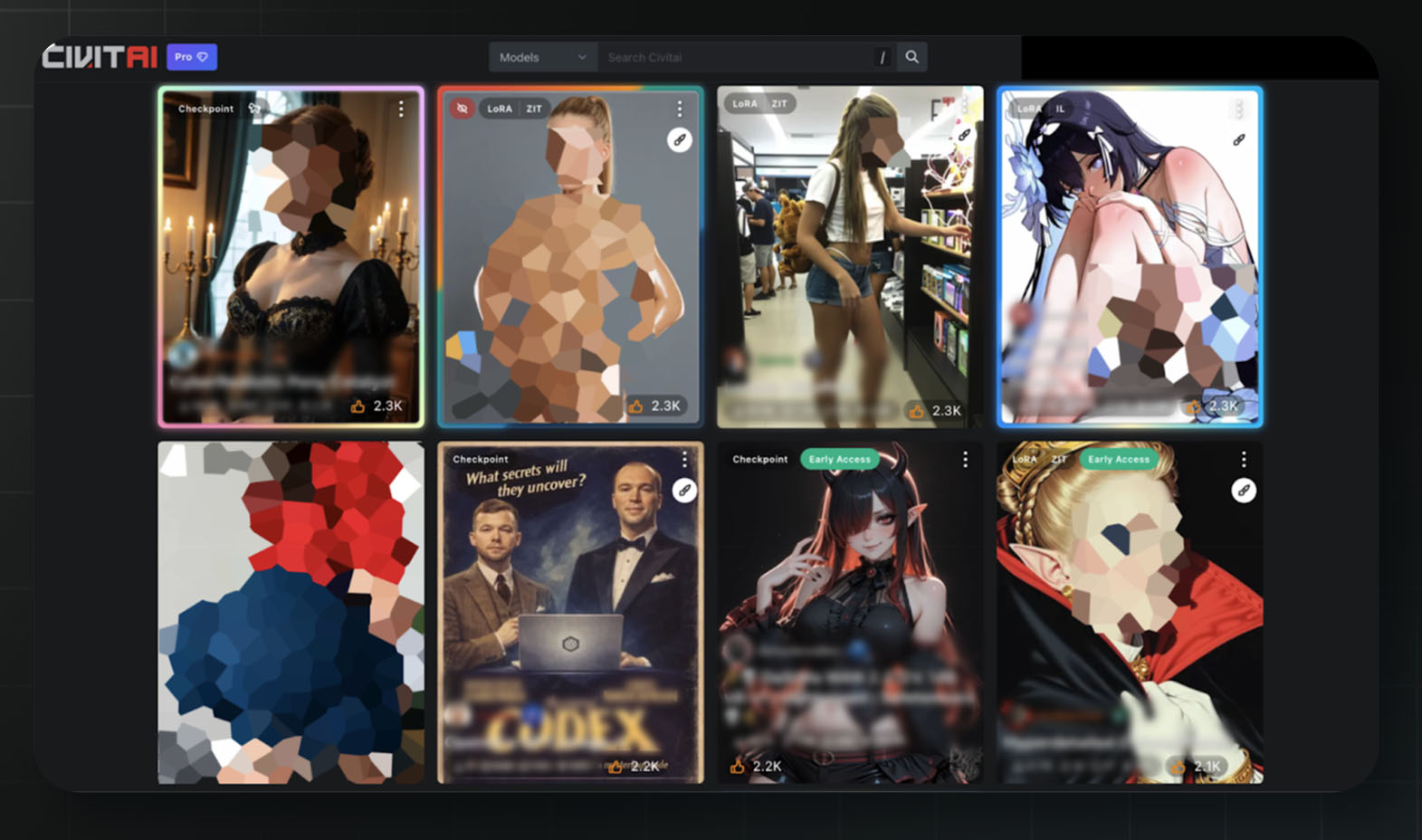

Everything you need to create sexualized deepfake images of celebrities, fictional characters, or regular people can be found on Civitai. The platform provides tools and resources to create these images locally on essentially any computer.

Popular AI systems like Google’s Gemini have tight restrictions on the types of images they will create — they can’t be used for sexual content, for example. But with Civitai, the rules seem to be nearly nonexistent.

A screenshot of the homepage of Civitai (sorting AI models by the most popular) for a test account that has mature content enabled with no past activity on the platform. This test account was also shown sexualized depictions of underage fictional characters on the homepage, as well as sexualized versions of characters from popular children’s media. Source: Civitai

In November 2023, 404 Media reported that Civitai's tools could create deepfakes of real people, including private citizens whose social media pictures had been scraped. Leaked internal communications from OctoML, Civitai's cloud computing provider at the time, revealed something even worse: in June 2023, OctoML employees flagged content on Civitai that “could be categorized as child pornography.” OctoML terminated its relationship with Civitai in December 2023.

The 404 Media report also revealed a16z’s involvement: a16z led a $5.1 million seed investment, also in June 2023. The investment was not publicly announced — it came to light only after the article’s authors reached out for comment.

A peer-reviewed study from the Oxford Internet Institute later counted over 35,000 deepfake models on Civitai, downloaded nearly 15 million times. Ninety-six percent depicted identifiable women.

Civitai's own safety disclosures acknowledge 178 reports filed with the National Center for Missing & Exploited Children for confirmed AI-generated child sexual abuse material, 183 models retroactively removed for being optimized to generate such material, and more than 252,000 user attempts to bypass these restrictions in one quarter. In previous reporting periods, they recorded over 100,000 attempts to generate child sexual abuse material.

A16z partner Bryan Kim, who led the investment, praised Civitai's “incredible, engaged community” in a statement to TechCrunch: “Our investment in the company will only supercharge something that’s already working incredibly well.”

In the 2023 blog post about AI companions, the a16z partners wrote, “We're entering a new world that will be a lot weirder, wilder, and more wonderful than we can even imagine.”

They were right about weirder and wilder. Fourteen-year-olds are forming attachments to AI chatbots that encourage committing suicide. Platforms are hosting thousands of uncensored AI models, some of which are used for generating child sexual abuse material. Bots are impersonating teenage actresses telling users that age-of-consent laws don’t matter.

A16z is now spending tens of millions of dollars to maintain a permissive regulatory environment for AI companions.

Consumer finance

Financial institutions play a key role in the economy, and their importance presents unique risks when they fail. That’s why rules around FDIC insurance, capital requirements, and consumer protection are crucial — we’ve seen what happens without them.

A16z's portfolio includes several companies that operate in the spaces between these safeguards.

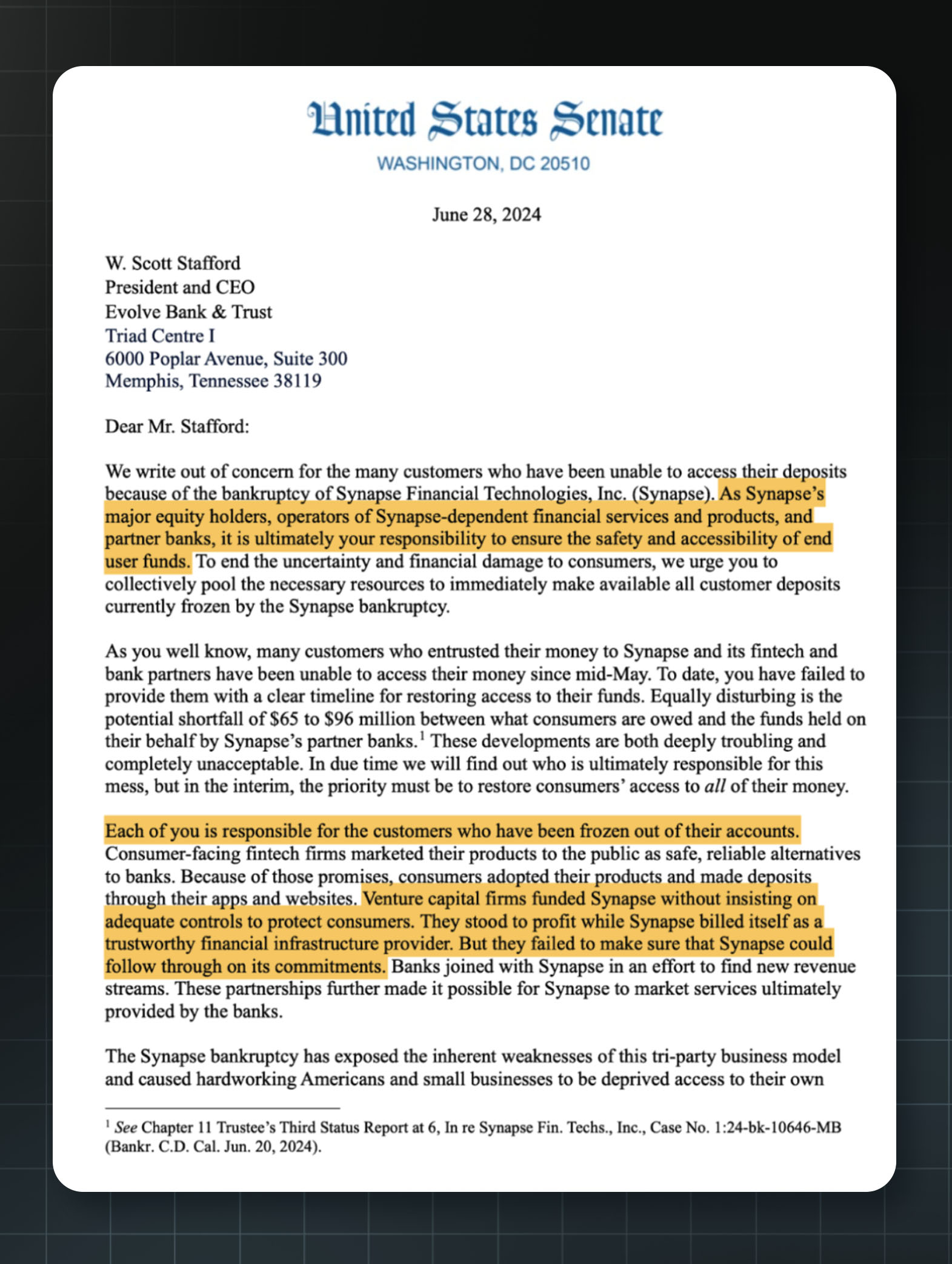

Synapse

A16z led a $33 million Series B in June 2019.

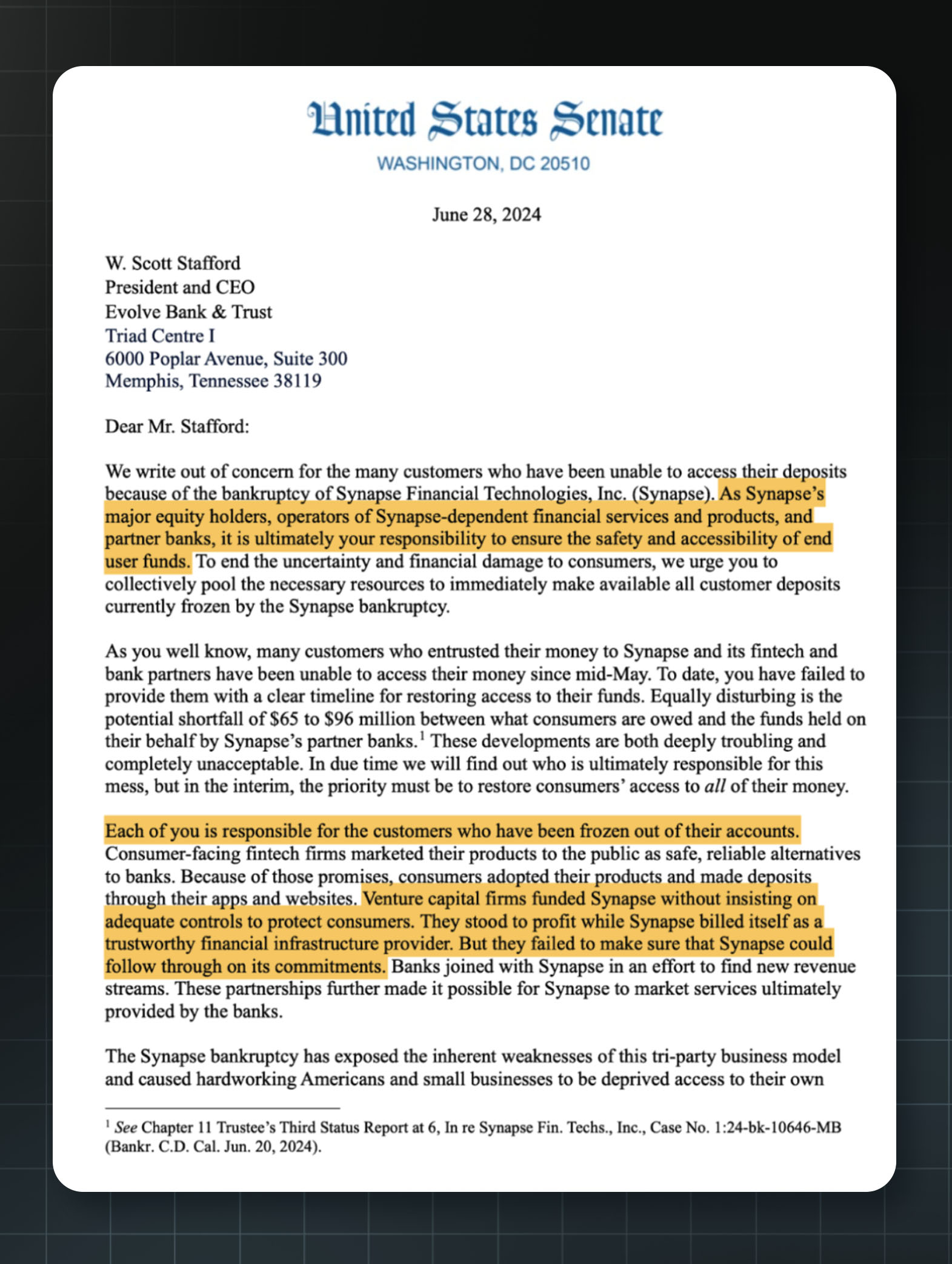

A letter sent to a16z, among other VC investors and corporate partners of Synapse, from U.S. Senators Sherrod Brown, Ron Wyden, Tammy Baldwin, and John Fetterman. Source: U.S. Senate Committee on Banking, Housing, and Urban Affairs

At its peak, Synapse managed billions of dollars across roughly 100 fintech companies, indirectly serving 10 million retail customers. The San Francisco company provided technical infrastructure that let startups offer bank accounts without being banks.

A16z led Synapse's $33 million Series B in June 2019. General Partner Angela Strange joined the Synapse board and described the company as “the [Amazon Web Services] of banking.”

Then on April 22, 2024, it all came crashing down: Synapse filed for bankruptcy.

Tens of thousands of U.S. businesses and consumers who relied on Synapse were suddenly locked out of their accounts.

A court-appointed trustee discovered that between $65 million and $96 million in customer funds was missing. Synapse's ledgers didn't match bank records, and its estate couldn't even afford a forensic accountant to find the money.

The human toll was severe. At Yotta, a company that relied on Synapse, 13,725 customers were offered a total of $11.8 million on $64.9 million in deposits. One customer who had deposited over $280,000 from the sale of her home was offered only $500.

People wanted answers.

In July 2024, the Senate Banking Committee chairman wrote directly to a16z and other investors, demanding that they step up to help the harmed customers. The letter noted that “venture capital firms funded Synapse without insisting on adequate controls to protect consumers.”

The Department of Justice then opened a criminal investigation into Synapse. In August 2025, the Consumer Financial Protection Bureau (CFPB) filed a complaint alleging that Synapse violated the Consumer Financial Protection Act by failing to maintain adequate records of customer funds.

Seven months after the bankruptcy filing, a16z co-founder Marc Andreessen appeared on Joe Rogan's podcast and described the CFPB as an organization that “terrorizes” fintech companies.

Truemed

A16z led a $34 million Series A in December 2025.

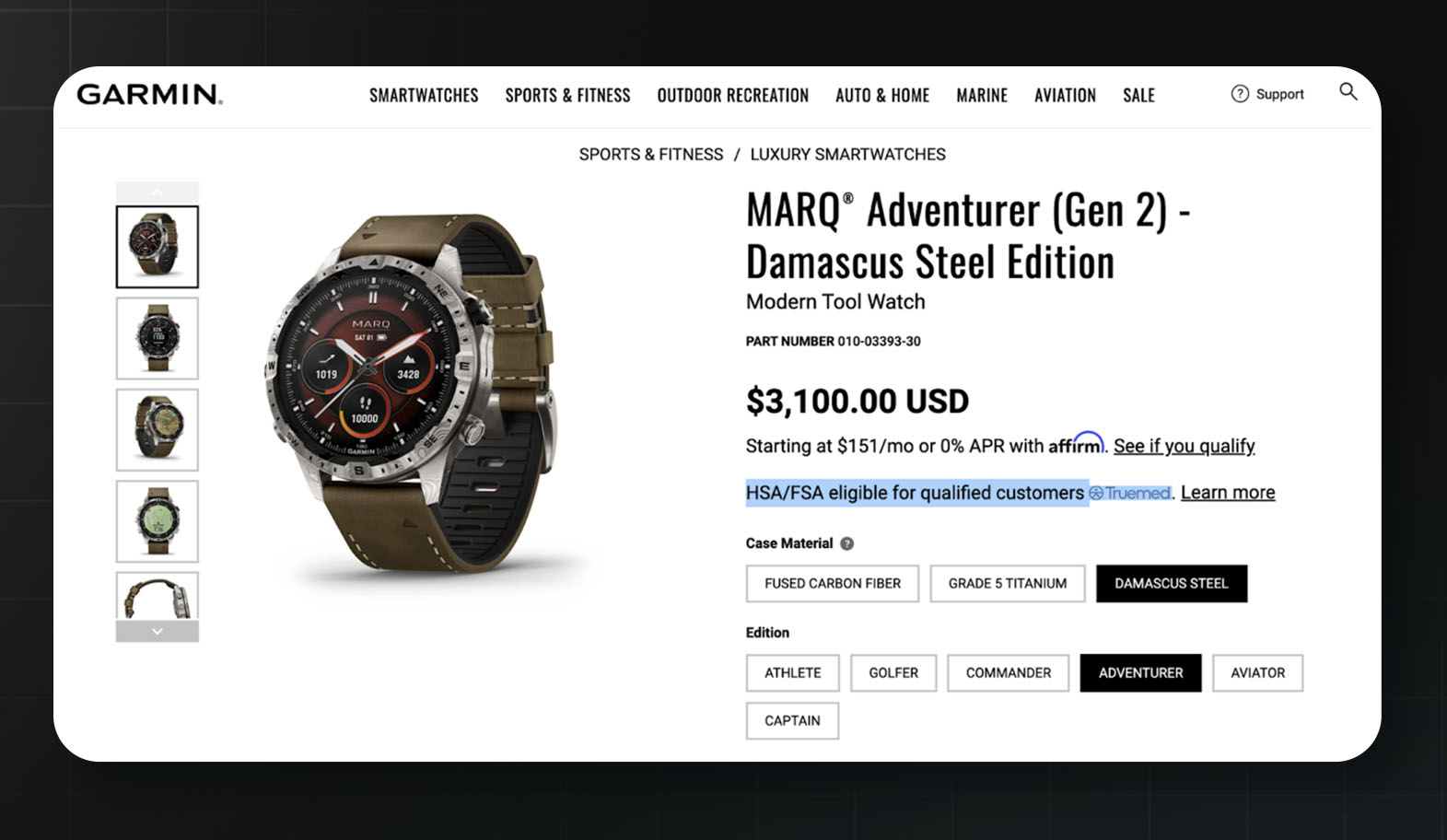

When a16z announced its investment in Truemed, lawyer and policy analyst Matt Bruenig responded: “This company gives letters of medical necessity to pretty much anyone so they can commit tax fraud.” He pointed to a $3,100 Garmin luxury watch listed as potentially eligible via Truemed for “a ~$1,500 tax break.” The New York Times reported that Truemed could help people get a tax break on a $9,000 sauna.

A $3,100 Garmin watch reimbursable with Truemed. Source: Garmin

Here’s how it works. The US government offers tax advantages for some forms of health spending. Truemed attempts to essentially automate the process of getting a medical letter attesting to the medical benefits of products, replacing a clinical visit with an online survey. Truemed partners with brands selling wellness products to consumers, earning fees from the transactions.

Critics like Bruenig argue that Truemed is abusing the system by making it easy to get tax advantages on luxury products without genuine need.

Truemed's product catalog spans cold plunges, saunas, red light therapy, road bikes, running shoes, mattresses, and pillows — all reimbursable via tax-advantaged funds after users complete an online questionnaire. The AP reported the platform also offers “...homeopathic remedies — mixtures of plants and minerals based on a centuries-old theory of medicine that’s not supported by modern science.”

In March 2024, the IRS warned the public about this business model.

“Some companies mistakenly claim that notes from doctors based merely on self-reported health information can convert non-medical food, wellness and exercise expenses into medical expenses, but this documentation actually doesn’t,” the IRS said in a statement. “Such a note would not establish that an otherwise personal expense satisfies the requirement that it be related to a targeted diagnosis-specific activity or treatment; these types of personal expenses do not qualify as medical expenses.”

Truemed CEO Justin Mares claims the company is “in full alignment” with IRS guidelines. Truemed co-founder Calley Means now serves as a senior advisor to Health and Human Services Secretary Robert F. Kennedy Jr., raising questions about potential conflicts of interest. The AP reported that Means founded a lobbying group of “MAHA entrepreneurs and Truemed vendors” that listed expanding tax-advantaged health accounts as a goal — a policy that would benefit his company.

In May 2025, Politico reported that Peter Gillooly, CEO of The Wellness Company, filed an ethics complaint against Means, alleging that Means leveraged his government position in a business dispute. A recorded call allegedly captured Means threatening to involve Kennedy and NIH Director Jay Bhattacharya if the competitor didn't comply. Truemed has since said that Means has divested from Truemed.

A16z's announcement made no mention of the IRS warnings — instead praising Truemed for addressing the “great American sickening.”

Tellus

Tellus offers “savings accounts” with interest rates far higher than traditional banks. But there’s a reason it can do what traditional banks can’t — it's not really a bank at all.

Customer deposits aren't FDIC-insured. Instead, Tellus uses the money to fund California real estate loans — including, according to Barron's, bridge loans to real estate speculators and distressed borrowers.

Legal scholars Todd Phillips and Matthew Bruckner wrote for the Stanford Law & Policy Review that Tellus is an “imitation bank” — taking customer deposits while evading the banking laws.

This doesn’t seem to be a problem for a16z, which led Tellus's $16 million seed round in late 2022. The warning signs have been mounting ever since.

In April 2023, Barron's investigated Tellus' claim that it had "banking partnerships" with JPMorgan Chase and Wells Fargo. Both companies told Barron’s that this was false.

“Wells Fargo does not have the relationship that's described on Tellus's website,” the bank told Barron's. JPMorgan said it had no “banking or custodial relationship with the company.” Tellus quietly removed the banks' names from its website.

The Barron’s investigation prompted Senator Sherrod Brown, chair of the Senate Banking Committee, to write letters to both the FDIC and Tellus. Brown was concerned Tellus's marketing misled consumers to think their deposits were as safe as those at FDIC-insured banks.

By July 2023, the FDIC had instructed Tellus to change its marketing to provide clearer information about deposit insurance coverage.

Then, in November 2023, Tellus got caught again. Barron's reported that a TikTok influencer campaign for Tellus promoted a savings account as “FDIC-insured” and “held at Capital One.” When Barron's contacted Capital One, the bank said it had never had such a partnership with Tellus. The company again removed the offending marketing materials.

Tellus appears to pose additional risks to consumers beyond its lack of FDIC insurance to protect customer funds. CyberNews discovered 6,729 files of Tellus user data were totally unprotected — customer names, emails, addresses, phone numbers, court dates, and scanned tenant documents from 2018 to 2020. Separately, a whistleblower filed a complaint with the SEC in 2021 alleging that Tellus's consumer products constituted an unlicensed security.

As of December 2025, Tellus continues to operate. The company's App Store listing now advertises rates of a minimum 5.29% APY. The fine print notes: “Backed by Tellus' balance sheet; not FDIC insured.”

LendUp

A16z participated in the seed round in October 2012.

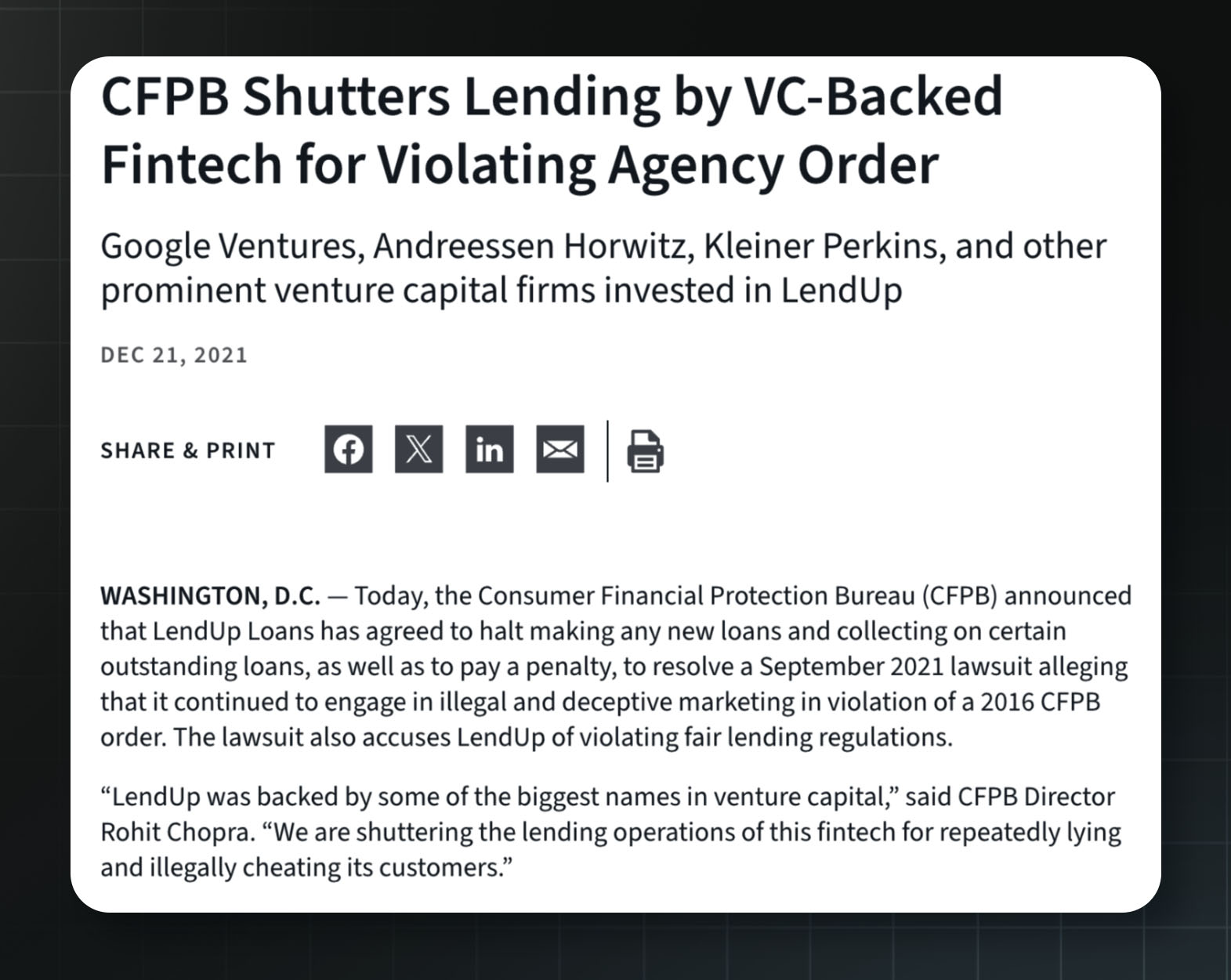

The CFPB's announcement that it was shutting down LendUp due to repeated violations of fair lending regulations. Source: CFPB

LendUp marketed itself as a “socially responsible” alternative to payday lenders. Borrowers would climb the “LendUp Ladder” by repaying loans and completing financial education courses, unlocking lower rates and credit-building opportunities.

A16z invested; so did Google Ventures, Kleiner Perkins, and PayPal. The company raised $325 million in total.

Time Magazine noticed something odd shortly after the 2012 launch: LendUp charged around $30 for a two-week loan of $200, roughly a 400% APR. That’s similar to what typical payday lenders would charge.
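As a rough check on that figure, the sketch below annualizes the quoted fee. It assumes simple (non-compounding) annualization over a 365-day year, which may not be the exact method behind the reported number, and `simple_apr` is a hypothetical helper name used only for illustration.

```python
def simple_apr(fee: float, principal: float, term_days: int) -> float:
    """Annualize a flat loan fee without compounding (an assumed method)."""
    period_rate = fee / principal        # cost of one loan term as a fraction
    periods_per_year = 365 / term_days   # how many such terms fit in a year
    return period_rate * periods_per_year


# Figures quoted above: about $30 in fees on a two-week, $200 loan.
apr = simple_apr(fee=30, principal=200, term_days=14)
print(f"{apr:.0%}")  # prints 391%
```

Under these assumptions the rate comes out just under 400%, consistent with the "roughly 400% APR" characterization.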

In 2016, the CFPB found LendUp had deceived consumers about graduating to lower-priced loans and had failed to report credit information, despite its promises. The agency ordered LendUp to pay $3.63 million in fines and redress. LendUp was ordered to stop misrepresenting its products.

LendUp kept doing it anyway, and it kept finding itself in trouble:

In 2020, the CFPB sued LendUp for violating the Military Lending Act, charging over 1,200 active-duty servicemembers rates above the legal maximum.

In 2021, the CFPB sued again, alleging LendUp had violated the 2016 consent order. The investigation found 140,000 repeat borrowers were charged the same or higher rates after climbing the ladder. CFPB Acting Director Dave Uejio said, “For tens of thousands of borrowers, the LendUp Ladder was a lie.”

In December 2021, the CFPB shut LendUp down. Director Rohit Chopra slammed its business model and its backers: “LendUp was backed by some of the biggest names in venture capital. We are shuttering the lending operations of this fintech for repeatedly lying and illegally cheating its customers.”

In May 2024, the CFPB distributed nearly $40 million to 118,101 consumers who were harmed by LendUp. The money came from the CFPB's victims relief fund because LendUp claimed a limited ability to pay. LendUp — the company that had raised $325 million — ended up paying only $100,000.

According to ProPublica, eight a16z-backed fintech companies have faced CFPB investigations since 2016. Marc Andreessen has made his disdain for the CFPB clear. Meanwhile, the firm's political spending via their crypto-focused super PAC, Fairshake, has punished political candidates who have supported the CFPB.

Legal issues

A16z's portfolio also includes companies with significant legal problems, often ignoring the rules that are already in place to protect customers.

Zenefits

A16z led a $15 million Series A in January 2014 and a $66.5 million Series B in June 2014.

An article from TechCrunch featuring David Sacks, who was COO of the company at the time of its meltdown. Source: TechCrunch

Zenefits offered free HR software to small businesses and made money by acting as their health insurance broker. A16z led both the Series A and Series B rounds, reportedly making Zenefits their largest investment in 2014.

By 2015, the company had raised $583 million and was valued at $4.5 billion.

The problem was that selling insurance requires state licenses — and Zenefits employees often didn't have them.

For example, California requires 52 hours of online training before the licensing exam. According to Bloomberg and BuzzFeed, CEO Parker Conrad personally wrote a Google Chrome browser extension — internally called “the macro” — that kept the training course's timer running while employees did other things. Employees then signed certifications, under penalty of perjury, attesting they'd completed the full training.

An investigation in November 2015 found unlicensed brokers selling health insurance in at least seven states. In Washington, more than 80% of the policies sold through August 2015 came from unlicensed employees.

In February 2016, Conrad resigned as CEO. The regulatory response was extensive: California’s Department of Insurance issued a $7 million fine — one of the largest licensing penalties in the department’s history. New York added $1.2 million in fines. Texas levied $550,000. Over a dozen other states secured settlements.

The SEC fined Zenefits and Conrad nearly $1 million combined for “materially false and misleading statements” to investors. In 2018, Conrad surrendered his California insurance license. The company's valuation was cut in half, and Zenefits eventually exited the insurance brokerage business entirely.

The person who took over as CEO to clean up the mess was COO David Sacks, who declared that the company's culture had been “inappropriate for a highly regulated company.” Sacks later told Bloomberg he “knew of the macro but didn't know its significance or about Conrad's involvement” until outside lawyers explained it in January 2016, despite having served as COO for over a year.

Sacks is now the White House AI and crypto czar, where he's been pushing to preempt state AI regulations in favor of a “minimally burdensome” federal framework — a priority for which a16z has also lobbied. Working alongside him is Sriram Krishnan, the Senior White House Policy Advisor on AI, who was an a16z general partner until weeks before his December 2024 appointment.

A16z was an active investor in Zenefits from the start. A16z partner Lars Dalgaard joined the board and personally pushed Conrad to double his 2014 revenue target from $10 million to $20 million.

“Lars sat there in his very Lars fashion and was like, 'Why are you guys so fucking bush league?’” Conrad later recalled. Dalgaard told him to hire at least 100 additional sales reps to make it happen.

Ben Horowitz later explained a16z's investment philosophy to Bloomberg: “We look for the magnitude of the genius, as opposed to the lack of issues. And in a way, [Conrad] was the prototype.”

Minimally burdensome federal rules are good for companies like those in a16z’s portfolio. They also create the kind of laissez-faire regulatory environment that allows a company like Zenefits to grow to a $4.5 billion valuation.

Health IQ

A16z led a $34.6 million Series C in November 2017.

Health IQ promised to use data science to give health-conscious people — runners, cyclists, vegetarians — lower life insurance rates. A16z led the Series C; Health IQ eventually raised over $200 million in equity and debt and was valued at $450 million by 2019. It pivoted from life insurance to Medicare brokerage, projecting $115 million in revenue.

But Health IQ’s business model had a flaw: the company reportedly paid out full multi-year commissions to sales reps upfront when policies were sold, before payments were received. The gap between recorded revenue and actual cash flow meant the company needed to take on increasing amounts of debt to pay its bills. By late 2022, it had $150 million in total debt.

In December 2022 — soon after Medicare open enrollment ended — Health IQ laid off between 700 and 1,000 employees without the 60-day notice required by California's WARN Act. Class action lawsuits followed.

A vendor called Quote Velocity filed a lawsuit alleging that in late November 2022, CEO Munjal Shah told Health IQ executives to buy as many leads as possible from vendors because Health IQ would “not be here” by the time invoices were due. The company was also sued for alleged Telephone Consumer Protection Act violations over its telemarketing practices.

In August 2023, Health IQ filed for Chapter 7 bankruptcy. The filing listed $256.7 million in liabilities and $1.3 million in assets. Seventeen breach-of-contract lawsuits were pending. In an email to investors obtained by Forbes, Shah wrote, “I am very sorry that I lost your money.”

CEO Munjal Shah was the subject of a Forbes daily cover story featuring a16z’s decision to continue working with the founder. Source: Forbes

By this point, Shah was already working on his next company. In January 2023 — while Health IQ employees were fighting for unpaid commissions — Shah and co-founder Alex Miller had started Hippocratic AI, a healthcare-focused AI startup.

When Hippocratic AI launched in May 2023, a16z co-led the $50 million seed round. A16z General Partner Julie Yoo explained the investment by noting that Shah had been “literally hanging out in our offices” while ideating his next venture.

uBiome

A16z participated in a $4.5 million Series A in August 2014.

The company uBiome sold at-home microbiome testing kits — mail in a fecal sample, get a report on your gut bacteria. The basic kit cost $89. By 2018, the company had raised $105 million and was valued at nearly $600 million. A16z had invested early, putting in $3 million in 2014.

But eventually it was clear that $89 consumer kits wouldn't generate enough revenue for venture capitalists. So uBiome developed “clinical” versions billed to insurance at up to $2,970 per test — and then, according to prosecutors, systematically defrauded insurers to make the numbers work.

In April 2019, the FBI raided uBiome's headquarters. The company filed for bankruptcy in September 2019.

In March 2021, federal prosecutors indicted co-founders Jessica Richman and Zachary Apte on 47 counts including securities fraud, health care fraud, and money laundering. Prosecutors said the company billed patients multiple times for the same test without consent, pressured doctors to approve unnecessary tests, and submitted backdated and falsified medical records when insurers asked questions.

According to the indictment, between 2015 and 2019, uBiome submitted over $300 million in fraudulent claims; insurers paid more than $35 million.

The SEC filed parallel charges, alleging that Richman and Apte defrauded investors of $60 million while personally cashing out $12 million by selling their own shares.

The FBI's statement was pointed: “This indictment illustrates that the heavily regulated healthcare industry does not lend itself to a ‘move fast and break things’ approach.”

Richman and Apte never stood trial. They married in 2019, fled to Germany in 2020, and remain fugitives. Prosecutors stated they are “actively and deliberately avoiding prosecution.” If convicted, they face up to 95 years in prison.

BitClout / DeSo

A16z invested $3 million in pre-sale tokens before March 2021 and participated in the $200 million DeSo token sale in September 2021.

BitClout was a social network that let users speculate on people's reputations by buying and selling “creator coins” — essentially a stock market for human beings.

To populate the network, founder Nader Al-Naji scraped 15,000 Twitter profiles without permission — including Elon Musk and Singapore's former Prime Minister Lee Hsien Loong, who publicly asked for his profile to be removed.

Al-Naji launched the project under the pseudonym “Diamondhands” and told investors that BitClout was a decentralized project with “no company behind it... just coins and code.” Users who wanted to participate had to exchange Bitcoin for BitClout's native token, BTCLT, but there was no way to convert it back.

A few months after launch, Al-Naji announced BitClout had been a “beta test” all along and pivoted to a new project called DeSo (Decentralized Social), taking the money with him. A16z and other investors participated in a $200 million token sale for DeSo in September 2021.

In July 2024, the SEC and DOJ charged Al-Naji with fraud. According to the SEC complaint, he raised $257 million from the sale of BitClout tokens while falsely telling investors that proceeds would not be used to pay himself or employees. The SEC alleged he spent over $7 million on personal expenses including a six-bedroom Beverly Hills mansion and at least $1 million in cash gifts each to his wife and mother.

The SEC also cited Al-Naji’s internal communications: he allegedly told one investor that “being ‘fake’ decentralized generally confuses regulators and deters them from going after you.”

BitClout had been a16z's second bet on founder Nader Al-Naji. The first was Basis, an algorithmic stablecoin that raised $133 million in 2017 from a16z, Google Ventures, Bain Capital, and others. It shut down in 2018 citing “regulatory constraints.” Al-Naji said he returned most of the money minus $10 million in expenses — which he claimed was spent on lawyers.

According to Fortune, a16z featured in the DOJ complaint against Al-Naji as “Investor 1” — a fraud victim and witness for the prosecution against a founder they backed twice. The DESO token is down over 97% from its all-time high. Al-Naji faced up to 20 years in prison for wire fraud.

In February 2025, soon after the new administration took office, the DOJ withdrew its charges.

Why this matters

Despite all this, Andreessen Horowitz stands firmly behind the companies in its portfolio.

“I do not believe they are reckless or villains,” Andreessen wrote of AI developers in 2023. “They are heroes, every one. My firm and I are thrilled to back as many of them as we can, and we will stand alongside them and their work 100%.”

So why does a16z’s role in backing these companies matter so much? Because a16z is not content to simply invest in tech companies. The firm is also attempting to play a major role in shaping US AI and technology policy, and it appears to be having success.

When President Trump signed an executive order in December 2025 attempting to undermine state AI laws, Andreessen was triumphant.

“It’s time to win AI,” he said on X.

Behind the scenes, a16z wielded tremendous influence in favor of the new rules. The executive order was a victory for those in the AI industry who have failed twice to convince Congress to pass a ban on state-level AI legislation, with a bipartisan coalition defeating previous efforts. It’s now unclear whether the executive order will hold up in court. But all signs point to a16z and its allies continuing to shape the regulatory environment around AI:

In August 2025, a16z launched a $100 million super PAC, Leading The Future, whose positions explicitly align with those of White House AI czar David Sacks. This group is widely expected to run attack ads against candidates who support AI regulation.

A16z also backed the American Innovators Network, which lobbies against AI regulation across multiple states.

Marc Andreessen serves on the board of Meta, which is investing tens of millions of dollars in each of its own pro-AI super PACs, Mobilizing Economic Transformation Across California and American Technology Excellence Project.

Sriram Krishnan, the White House Senior Policy Advisor on Artificial Intelligence, was an a16z General Partner until weeks before his December 2024 appointment. He works closely with Trump’s AI and crypto czar David Sacks and is attempting to deliver what a16z lobbied for: preempting state AI regulations.

Two other former a16z partners have taken roles focused on downsizing the government: Scott Kupor (Office of Personnel Management) and Jamie Sullivan (Department of Government Efficiency).

What is the ultimate aim of these efforts? The firm appears to have both ideological and profit motives.

A16z has invested billions of dollars in companies that stand to benefit if they can control AI regulations.

In addition to the massive financial incentive, Andreessen laid out his ideological aims in explicit terms in his Techno-Optimist Manifesto published in October 2023. Andreessen’s manifesto advocates for accelerated technological development in fanatical terms. It claims that “we are the apex predator” and “we are not victims, we are conquerors.” It lists many “enemies,” including:

Risk management

Tech ethics

Social responsibility

The precautionary principle

Existential risk

Stakeholder capitalism

And “the know-it-all credentialed expert worldview”

The manifesto embraces an extremist view on regulation, declaring that because the development of AI could save lives, it is a “form of murder” if the technology is slowed down in any way. This position also happens to align with Andreessen and a16z’s financial interests.

Polling suggests the American public disagrees and overwhelmingly favors AI safety and data security regulations, even if it means developing AI capabilities at a slower rate. In fact, Pew Research found that 58% of Americans thought that government regulation of AI wouldn’t go far enough. Only 21% — less than a quarter — thought it would go too far.

While a16z has claimed it would support a narrow set of AI regulations, the actual proposals are thin. This isn’t surprising given Andreessen decried AI regulation as “the foundation of a new totalitarianism.” So far, the firm’s efforts have gone to stopping, not enacting, regulation.

AI differs from previous technologies in significant ways. A gambling app that exploits sweepstakes loopholes can hurt the people who use it. A fintech startup with sloppy recordkeeping can lose its customers' deposits. These are serious harms. But the advanced AI systems coming in the next decade are another matter entirely. As the technology improves rapidly and operates with increasing autonomy, the mistakes will be more difficult, or even impossible, to reverse.

A16z is betting they can write the rules before society realizes what's at stake. They're spending tens of millions on lobbying and super PACs. They're installing allies in government. And they’re backing AI companies that want to “move fast and break things,” with little regard for the damage they’re causing.

The social and legal decisions being made now — about safety requirements, liability frameworks, deployment standards, enforcement mechanisms — will profoundly shape AI development. The public has neither a seat at the table nor expensive lobbyists on their payroll.

Instead, these decisions are being shaped by a firm that treats “trust and safety” as the enemy, backs companies built on deception and consumer harm, and rewards failure by funding the same founders again.

These investments reveal the lines that a16z is willing to cross and how the lax regulatory environment that they favor would benefit the firm’s bottom line.

A16z did not respond to a request to comment for this report.

Deception and manipulation

A16z has invested in products designed for mass deception. Even if these tactics don’t explicitly violate the law, they can be corrosive to society.

As technology like advanced AI improves — making it much easier to fake almost anything — decision makers may want to enact new laws or policies that mitigate the social costs. And if a16z gets its way, we might never update the rulebook.

Doublespeed

A16z invested $1 million in October 2025 via Speedrun.

Doublespeed sells the capacity to trick everyday people, and social media platforms themselves, into thinking AI-generated ads are genuine human content. Here are some select quotes from the company’s promotional video:

“We run the only VC-backed bot farm in America. Because why let Russia and China have all the fun?”

“We didn't break the internet. It was broken to begin with. But now we're killing it entirely.”

“Welcome to the dead internet.”

A16z's Speedrun program invested $1 million in Doublespeed, a company that was recently covered in a blistering article by 404 Media, which reported: “Andreessen Horowitz is funding a company that clearly violates the inauthentic behavior policies of every major social media platform.”

Excerpts from Doublespeed’s website

The company’s business model relies on deception, designed to make social media platforms and their users believe AI-generated images and videos depict real people.

How do they do this? By selling access to “phone farms” that create and manage thousands of fake social media accounts to manipulate engagement metrics. The company's website is explicit, saying its product “mimics” the behavior of real people on social media in order to “get our content to appear human to the algorithms.”

“Yes, we built a phone farm (and its pretty sick),” said Doublespeed founder Zuhair Lakhani on X. The purpose was “replacing human creators with ai, mainly used for marketing.”

A photo of Doublespeed’s phone farms, shared by the founder Zuhair Lakhani on X.

They use thousands of real phones to pull this off because social media platforms like TikTok have policies against, and methods to detect, the mass generation and deployment of fake accounts.

The company has the accounts imitate human behavior before posting deceptive content. This means the fake accounts search specific keywords, scroll their “For You” pages, and use AI to analyze screenshots of content to determine whether to “repost it, comment on it” or “swipe away.”

A feed of AI-generated marketing content created by Doublespeed. Source: Superwall on YouTube

A selection of nearly identical Doublespeed-run TikTok accounts. Most posts involve the AI decoy complaining about any one of a number of medical issues. Then, the account lists a handful of cures, including a foam roller product from Doublespeed’s client. Source: TikTok, Doublespeed on Loom

This is all designed to circumvent platforms’ restrictions on fake content and then serve that fake content to unsuspecting real people.

In a podcast interview, Lakhani offered details about one of the company’s clients: “They're hitting like the old person niche, which is what I think is like the best niche to hit with AI content.”

Polling and research have found that older people are less likely to say they’ve heard about AI and more likely to fall for AI-generated misinformation.

Lakhani drew a parallel between this client and his prior work producing AI-generated marketing content at scale: “It was all like old person niche stuff. So like all supplements that would, you know, target old people, and that's when the commission would go crazy.”

“Those brands would tell you to do like, you know, make some like extremely crazy claims,” he said, “especially with supplements.” Lakhani added, “The supplement stuff should definitely be like kind of illegal. I don't know how that is allowed.”

Despite its founder stating that supplement ads should be illegal, Doublespeed isn’t shying away from them. In December 2025, a hacker gained access to Doublespeed’s entire backend, and the leaked data showed what the AI-generated “influencers” were actually selling.

One account, “pattyluvslife,” featured an AI-generated woman claiming to be a UCLA student. The account criticized the supplement industry and pharmaceutical companies as fraudulent — while simultaneously promoting a herbal supplement from a brand called Rosabella.

Another account under the name “chloedav1s_” had uploaded some 200 posts featuring an AI-generated woman claiming to suffer from various health conditions and often pictured in a hospital bed. She ultimately promoted a specific company’s foam roller as a solution to her ailments.

A tweet from Doublespeed’s founder shows one of the company’s bot accounts messaging a user to promote the product. In the post, Lakhani boasted, “A couple of weeks ago, we gave the [AI] agents access to dm … This was for an ecommerce brand - out of 130 dms sent, 15 pointed to a conversion.”

Another image from Doublespeed’s platform showing their bot account, imitating a human and messaging users with medical conditions to promote the client’s foam roller product. Source: Zuhair Lakhani on X.

The Doublespeed hack revealed more than 1,100 phones and over 400 TikTok accounts operated by the company. Most of the accounts were promoting products without disclosing that the posts were paid advertisements — a violation of both TikTok's Community Guidelines, which require creators to label AI-generated content depicting realistic scenes, and FTC regulations, which require influencers to clearly disclose any “material connection” to a brand when endorsing products.

Doublespeed and a16z did not respond to 404 Media’s requests for comment. After 404 Media flagged the accounts to TikTok, the platform said it added labels indicating they were AI-generated. However, The Midas Project’s follow-up investigation found that while labels have been added to some content from some Doublespeed-run accounts (including chloedav1s_), others with comparable reach and near-identical content remain unlabeled (such as lilyw4tson and mia.garc1a), and most commenters appear to believe the posts are authentic.

Cluely AI

A16z led a $15 million Series A in June 2025.

Cluely's official manifesto declares: “We want to cheat on everything. Yep, you heard that right. Sales calls. Meetings. Negotiations. If there's a faster way to win — we'll take it... So, start cheating. Because when everyone does, no one is.”

Cluely’s co-founders Neel Shanmugam (left), Roy Lee (center), and Alex Chen (right). Source: Cluely via Bloomberg.

Founder and CEO Roy Lee is no stranger to using AI to cheat. By his own admission to New York Magazine, while studying at Columbia, he used AI to cheat on “nearly every assignment,” estimating that ChatGPT wrote 80% of every essay he turned in. “At the end, I'd put on the finishing touches. I'd just insert 20 percent of my humanity, my voice, into it.”

In early 2025, Lee built Interview Coder, a tool that operates behind the scenes during technical coding interviews and feeds AI-generated solutions to users in real time. He recorded himself using it to pass Amazon's interview, received a job offer, publicly declined it with mockery, and posted the video to YouTube. He also claimed to have received offers from TikTok, Meta, and Capital One. Amazon reported him to Columbia. The university placed him on probation for “facilitation of academic dishonesty.”

“Even if I say extremely crazy shit online,” Lee has explained, “it will just make more people interested in me and the company and it will just drive more downloads and conversions and get more eyeballs onto Cluely.”

A marketing video for Cluely suggests that the product can be used discreetly to “cheat” on dates. Source: YouTube

Cluely's launch video demonstrated another of the product's intended use cases: dating. In it, Lee goes on a blind date and uses the tool to lie about his age, job, and interests. The video has so far amassed 13 million views on X.

Under scrutiny, Cluely has quietly walked back some of its original positioning. The company scrubbed references to cheating on exams and job interviews from its website. By November, the company had repositioned itself as an AI meeting assistant and notetaker — entering a crowded market far from its provocative origins. Lee told TechCrunch that Cluely's “invisibility function is not a core feature” and that “most enterprises opt to disable the invisibility altogether because of legal implications.” Despite Lee’s claim that invisibility is not a core feature, the very first sentence of Cluely’s homepage advertises the product as “undetectable.”

Cluely’s home page at time of publication. Source: Cluely

Lee's stated goal was to “desensitize everyone to the phrase ‘cheating.’” If you say it enough, he argues, “cheat begins to lose its meaning.” A16z praised Lee's approach as “rooted in deliberate strategy and intentionality.”

While some companies, like Lyft, largely benefited everyday people while breaking rules around taxi regulation, Lee is interested in breaking something more fundamental: the shared understanding that lying and cheating are wrong.

Cluely AI announced a $15 million Series A led by a16z in June 2025. Cluely and Doublespeed share a theory: that the basic rules governing social and professional life are obstacles to be overcome. A16z would seem to agree.

Gambling

Since a 2018 Supreme Court ruling, sports betting has proliferated in the U.S. Many of the impacts haven’t been pretty. Researchers have found evidence that the rise of easy access to gambling has pushed people into greater debt, been linked to violence, and increased strain on financially vulnerable households.

Meanwhile, a16z has invested in several gambling companies that use regulatory loopholes to reach users who would otherwise be protected by existing gambling laws.

Coverd

Coverd is pursuing a novel form of gambling. The company announced its app in March 2025, inviting users to “bet on your bills — OnlyFans, child support, and last night's Uber. Wipe them from your credit card by playing your favorite casino games.” The app syncs with your bank accounts and allows you to select individual transactions from your credit card bill and bet against them, gambling to potentially win back the value of the transaction (or, more realistically, to double your losses).

The company's CEO has stated openly, “We didn't build Coverd to help people inhibit their spending; we built it to make spending exciting. We let spenders win twice – the second time is when they play it back and win.”

A now-deleted advertisement for the Coverd app. Source: Coverd on X via Archive.is

This marketing likely appeals to people who are already stretched thin and desperate. Many customers may be financially vulnerable and willing to chase any way to erase expenses that they don’t know how to pay off.

But gambling is never a good approach to getting out of debt, as the leadership at Coverd and a16z surely know. The core business model of gambling is to offer players bets with negative expected value. What keeps them playing is that near-miss outcomes activate the brain's dopamine system similarly to actual wins, and gambling games are often deliberately designed to produce these near-misses frequently. Add cognitive biases like selective memory and the gambler's fallacy, and the outcome is lopsided: one study suggests 96% of long-term gamblers lose money.

Nonetheless, Coverd’s app store description describes the product as a way to make the user more financially savvy, suggesting that the app will help them improve their financial health. It reads: “Coverd makes everyday finance more engaging and interactive! See your spending habits, play games, and become more financially savvy! Win in-game tokens as you play and stay on top of your finances — all in one easy-to-use app. No purchase required, just a fresh take on financial awareness. Download Coverd and become money-smart today!”

The homepage of the app encourages the user to link their credit card to “bring your spending insights to the next level.” An in-app advertisement for an upcoming Coverd-branded credit card suggests that users will receive “up to 100% cash back” on their purchases.

Coverd raised $7.8 million in seed funding with a16z participation, and a16z partner Anish Acharya sits on the board.

Edgar

The homepage for Edgar. Source: Edgar.co

How do you build a casino that’s not a casino? The company Edgar, a part of a16z’s portfolio, thinks it has found the answer in its game BettySweeps, launched in January 2025.

Edgar calls it “America's #1 social casino for slot lovers!”

This game uses a trick common among sweepstakes casinos — using two different currencies. By making a purchase, players receive “Betty Coins” for entertainment, as well as a “bonus” gift of “Sweepstakes Coins” that can be gambled and redeemed for cash prizes. The company claims no purchase is necessary to play — but multiple states have concluded that such models constitute illegal gambling regardless.

In August 2025, Arizona's Department of Gaming issued cease-and-desist orders to BettySweeps and three other sweepstakes operators. The department accused them of operating “felony criminal enterprises” and ordered them to “desist from any future illegal gambling operations or activities of any type in Arizona.”

The company exited California ahead of that state's sweepstakes ban, which took effect in January 2026. BettySweeps is now restricted in 15 states: Arizona, California, Connecticut, Delaware, Idaho, Kentucky, Louisiana, Maryland, Michigan, Montana, Nevada, New Jersey, New York, Washington, and West Virginia.

Edgar also operates a separate real-money online casino in Ontario, Canada — where it is properly licensed by the Alcohol and Gaming Commission of Ontario. The company evidently knows how to obtain gambling licenses and comply with regulations when it chooses to. In the United States, it chose a different path.

Cheddr

On a16z's own Speedrun accelerator website, Cheddr is described as “building the TikTok of sports wagering.”

The company wants to push the frontier of sports betting across the country, targeting 46 states even though only approximately 34 have legalized online sports betting. It’s also aiming its app at users under age 21. To do this, the company is exploiting the same sweepstakes law loophole that Edgar uses. This lets Cheddr offer sports betting that supposedly isn’t “gambling” in the eyes of regulators.

The promotional video shows users swiping through rapid-fire prop bets during live games; “it’s sports wagering at the pace of a slot machine,” the video says.

A now-unlisted YouTube ad for Cheddr. Source: Jason Krupat via YouTube

There are good reasons lawmakers have been reluctant to open up gambling to 18-year-olds. Researchers have found that teenagers are roughly twice as likely as adults to develop gambling disorders.

But perhaps that’s the point. Just as cigarette and alcohol companies have been happy to get customers addicted to their products while young, Cheddr may be hoping its TikTok-style engagement mechanics will start forming lifelong gambling habits in its youngest users. Why else combine the already addictive features of TikTok with the notoriously addictive habit of gambling?

Concerns about this product have grown so severe that California's Governor Newsom recently signed legislation banning sweepstakes gambling platforms such as Cheddr.

Sleeper

A16z led a $20 million Series B in May 2020 and participated in a $40 million Series C in September 2021.

Andreessen Horowitz has invested over $60 million in Sleeper, a fantasy sports platform. A16z General Partner Andrew Chen, who sits on the board of the startup, has praised Sleeper's “stickiness metrics” — the same engagement patterns that researchers associate with habit formation and addiction.

Like Cheddr, Coverd, and Edgar, Sleeper has found a strategy allowing it to largely evade existing gambling restrictions.

Sleeper is technically operating a daily fantasy sports (DFS) game. Users can win or lose money on the basis of the performance of individual players they’ve selected before a match, rather than the outcome of the match itself. Some argue this makes it a game of skill, not chance, allowing it to legally operate with real money wagers.

The company now faces class action lawsuits in California and Massachusetts alleging that its app is an illegal gambling operation. California’s attorney general declared in July 2025 that daily fantasy sports constituted unlawful wagering under state law: “We conclude that participants in both types of daily fantasy sports games — pick’em and draft-style games — make ‘bets’ on sporting events in violation of section 337a.”

New York banned Sleeper's pick'em games in 2023; Michigan enacted a similar prohibition. Florida and Wyoming have issued cease-and-desist orders to pick'em operators.

An advertisement for Sleeper on a San Francisco bus, suggesting “massive income” for users. Source: @Alexeyguzey on X